Abstract

In this work, we illustrate the implementation of physics-informed neural networks (PINNs) for solving forward and inverse problems in structural vibration. Physics-informed deep learning has lately proven to be a powerful tool for the solution and data-driven discovery of physical systems governed by differential equations. Despite the popularity of PINNs, their application in structural vibrations is limited. This motivates extending PINNs to this new domain, leveraging the available knowledge in the form of governing physical laws. On investigating the performance of conventional PINNs in vibration problems, we mostly find that they suffer from a recently identified scaling or regularization issue, which leads to inaccurate predictions. We then demonstrate that a simple strategy of modifying the loss function combats this issue and enhances the approximation accuracy significantly without adding any extra computational cost. In addition to these two contributions, the conventional and modified PINNs are implemented in the MATLAB environment, owing to its recently developed rich deep learning library. Since all developments of PINNs to date are Python based, this is expected to diversify the field and reach a greater scientific audience who are more proficient in MATLAB but are interested in exploring the prospects of deep learning in computational science and engineering. As a bonus, complete executable codes for all four representative (both forward and inverse) problems in structural vibrations are provided, along with lucid line-by-line explanations and well-interpreted results for better understanding.

Introduction

Deep learning (DL) has recently emerged as an incredibly successful tool for solving ordinary differential equations (ODEs) and partial differential equations (PDEs). The popularity of DL as an alternative ODE/PDE solver may be attributed largely to recent developments in automatic differentiation (AD) [1] and high-performance open-source software such as TensorFlow [2], PyTorch [3] and Keras [4]. This led to the development of a simple, general and potent class of forward ODE/PDE solvers and also novel data-driven methods for model inversion and identification, referred to as physics-informed machine learning or, more specifically, physics-informed neural networks (PINNs) [5, 6]. Although PINNs have been applied to a diverse range of problems across disciplines [7–9], including but not limited to fluid mechanics, computational biology, optics, geophysics and quantum mechanics, their application in structural vibrations has been limited and has only recently been gaining attention [10–15].

The architecture of PINNs can be customized to comply with any symmetries, invariances, or conservation principles originating from the governing physical laws modelled by time-dependent and nonlinear ODEs and PDEs. This feature makes PINNs an ideal platform for incorporating domain knowledge in the form of soft constraints, so that this prior information acts as a regularization mechanism to effectively explore and exploit the space of feasible solutions. Owing to these features and the generalized framework of PINNs, they are expected to be as suitable for structural vibration problems as for any other application of computational physics. Therefore, in this paper, we investigate the performance of conventional PINNs for solving forward and inverse problems in structural vibrations. We then show that modifying the loss function alleviates the scaling or regularization issue, an inherent drawback of first-generation PINNs referred to as “gradient pathology” [16], and yields a significant improvement in approximation accuracy. An important aspect of this strategy is that it does not require any additional training points and hence does not add to the computational cost. Moreover, since existing implementations of PINNs are in Python, this work explores the MATLAB environment for the first time. This is possible due to the recently developed DL library and built-in AD routines in MATLAB. The solution and identification of four representative structural vibration problems have been carried out using PINNs. We also provide complete executable MATLAB codes for all the examples, with line-by-line explanations for easy reproduction. This is expected to serve a large section of the engineering community who are interested in applying DL to structural mechanics or other fields and are more proficient and comfortable in MATLAB. 
Special emphasis has also been provided to present a generalized code so that all the recent improvements in PINNs architecture and its variants (otherwise coded in Python) can be easily reproduced using our present implementation.

Formulation of Physics-Informed Neural Networks

One of the major challenges PINNs circumvent is the overdependence of data-centric deep neural networks (DNNs) on training data. This is especially useful as sufficient data is often not available for physical systems. The basic concept of PINNs is to determine the trainable parameters of the DNN by making use of the governing physics, encoding this prior information within the architecture in the form of the ODE/PDE. This soft constraining promotes conformity with the physical laws modelled by the governing equation, the initial and boundary conditions, and the available measurements.

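Consider the PDE for the solution $u(t, x)$, parameterized by system parameters $\lambda$ and defined in the domain $\Omega$, with the following initial conditions $\mathcal{I}$ and boundary conditions $\mathcal{B}$, respectively, as,

$$\mathcal{F}\left(u(t, x); \lambda\right) = f(t, x), \quad x \in \Omega,\ t \in [0, T] \tag{1}$$

$$\mathcal{I}\left(u(0, x)\right) = g(x), \quad x \in \Omega; \qquad \mathcal{B}\left(u(t, x)\right) = h(t, x), \quad x \in \partial\Omega,\ t \in [0, T] \tag{2}$$

Here, $t$ and $x$ represent the time and spatial coordinates, respectively, and $\partial\Omega$ is the boundary of $\Omega$. For solving the PDE via PINNs, the solution $u(t, x)$ is approximated by constructing a neural network $\mathcal{N}(t, x; \theta)$ to yield $\hat{u}(t, x; \theta)$ such that $\hat{u} \approx u$, where $\theta$ denotes a concise representation of all the trainable parameters. The trainable parameters (denoted as $\theta$ for tractability) consist of weight matrices and bias vectors. These matrix and vector components of a neural network are randomly initialized and are optimized by minimizing the loss function during the training process. The training strategy of PINNs is illustrated point-wise below.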

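A set of collocation points $\{(t_i^{r}, x_i^{r})\}_{i=1}^{N_r}$ inside the domain $\Omega$ is generated using a suitable experimental design scheme. Separate sets of points are generated on the boundary $\partial\Omega$, $\{(t_i^{b}, x_i^{b})\}_{i=1}^{N_b}$, and for the initial conditions, $\{x_i^{0}\}_{i=1}^{N_0}$.

The loss function that penalizes the PDE residual is formulated based on the generated interior collocation points as,

$$\mathcal{L}_{PDE}(\theta) = \frac{1}{N_r} \sum_{i=1}^{N_r} \left\| \mathcal{F}\left(\hat{u}(t_i^{r}, x_i^{r}; \theta); \lambda\right) - f(t_i^{r}, x_i^{r}) \right\|^2 \tag{3}$$

where $\hat{u}$ is the neural network output with trainable parameters $\theta$, and $\mathcal{F}(\cdot\,; \lambda)$ and $f$ are the differential operator and right-hand side of the PDE in Eq. 1. Note that the derivatives of $\hat{u}$ with respect to $t$ and $x$ in Eq. 3 are computed using AD.

The loss functions that ensure the satisfaction of the boundary and initial conditions, respectively, are defined as,

$$\mathcal{L}_{BC}(\theta) = \frac{1}{N_b} \sum_{i=1}^{N_b} \left\| \mathcal{B}\left(\hat{u}(t_i^{b}, x_i^{b}; \theta)\right) - h(t_i^{b}, x_i^{b}) \right\|^2 \tag{4}$$

$$\mathcal{L}_{IC}(\theta) = \frac{1}{N_0} \sum_{i=1}^{N_0} \left\| \mathcal{I}\left(\hat{u}(0, x_i^{0}; \theta)\right) - g(x_i^{0}) \right\|^2 \tag{5}$$

where $\mathcal{B}$, $\mathcal{I}$ and $h$, $g$ are the boundary and initial operators and their prescribed data in Eq. 2.

The composite loss function is defined as the sum of the above individual loss terms, namely, the losses of the PDE residual $\mathcal{L}_{PDE}$, boundary conditions $\mathcal{L}_{BC}$ and initial conditions $\mathcal{L}_{IC}$.

$$\mathcal{L}(\theta) = \mathcal{L}_{PDE}(\theta) + \mathcal{L}_{BC}(\theta) + \mathcal{L}_{IC}(\theta) \tag{6}$$

The final goal is to compute the parameters $\theta$ by minimizing the loss function in Eq. 6 as shown below and construct a DNN representation.

$$\theta^{*} = \arg\min_{\theta}\ \mathcal{L}(\theta) \tag{7}$$

Usually, $\mathcal{L}(\theta)$ is minimized using the stochastic gradient descent method. Once the PINNs model is constructed, it can be used to predict the system response $\hat{u}(t^{*}, x^{*}; \theta^{*})$ at an unknown input $(t^{*}, x^{*})$.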

Despite immense success, the plain vanilla version of PINNs (as discussed above) has often been criticized for not performing well even for simple problems. This is due to the regularization of the composite loss term as defined in Eq. 6. In particular, the individual loss functions $\mathcal{L}_{PDE}$, $\mathcal{L}_{BC}$ and $\mathcal{L}_{IC}$ are of widely varying scales, leading to gradient imbalances between the loss terms and eventually resulting in an inaccurate representation of the PDE solution. This issue has been recently analyzed in detail [16]. Since manually tuning the importance of each term can be tedious, numerous studies on multi-objective optimization have been undertaken which allow adaptive/automatic scaling of each term in the loss function, including the popular weighted sum approach. A scaling approach was proposed by Wang et al. [16] for PINNs based on balancing the distribution of gradients of each term in the loss function. Although their approach proved to be effective, it entails extra computational effort.

Alternatively, we employ a different approach that addresses the scaling issue and at the same time requires no extra computational effort. To avoid multiple terms in the composite loss function, the DNN output $\hat{u}$ is modified to $\tilde{u} = \mathcal{G}(\hat{u})$ so that the initial and/or boundary conditions are satisfied by construction, leaving only the PDE residual to be minimized. The determination of the mapping function $\mathcal{G}$ involves a simple manipulation of the expression of the DNN-approximated solution, which is illustrated later in the numerical examples section. With the modified neural network output $\tilde{u}$, the new loss function can be expressed as,

$$\mathcal{L}(\theta) = \frac{1}{N_r} \sum_{i=1}^{N_r} \left\| \mathcal{F}\left(\tilde{u}(t_i^{r}, x_i^{r}; \theta); \lambda\right) - f(t_i^{r}, x_i^{r}) \right\|^2 \tag{8}$$

Note that the new loss function only involves the PDE residual of the modified output $\tilde{u}$ in the domain $\Omega$, evaluated at the $N_r$ interior collocation points, while the associated boundary and/or initial conditions remain satisfied without their corresponding loss terms. This is only possible due to the modified DNN output. As before, the derivatives of $\tilde{u}$ in Eq. 8 are computed using AD.
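As a minimal illustration of the output modification, the sketch below assumes the initial conditions u(0) = 0 and du/dt(0) = 0 and a hypothetical mapping of the form ũ(t) = t² û(t). This mapping is only one possible choice made for illustration and is not necessarily the one adopted in the numerical examples. The function “uhat” is a stand-in for the raw network output; any smooth function would serve.

```matlab
% Hypothetical stand-in for the raw DNN output (not a trained network)
uhat = @(t) sin(3*t + 0.5) + 2;

% Assumed mapping: multiplying by t.^2 enforces u(0) = 0 and du/dt(0) = 0
% regardless of the values taken by uhat
utilde = @(t) t.^2 .* uhat(t);

h   = 1e-6;                                  % finite-difference step
u0  = utilde(0);                             % displacement at t = 0
du0 = (utilde(h) - utilde(-h)) / (2*h);      % central-difference velocity at t = 0

fprintf('u(0) = %g, du/dt(0) = %g\n', u0, du0);
```

Because both conditions hold for any “uhat”, no initial-condition loss term is needed, and training only has to drive the residual of the governing equation to zero.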

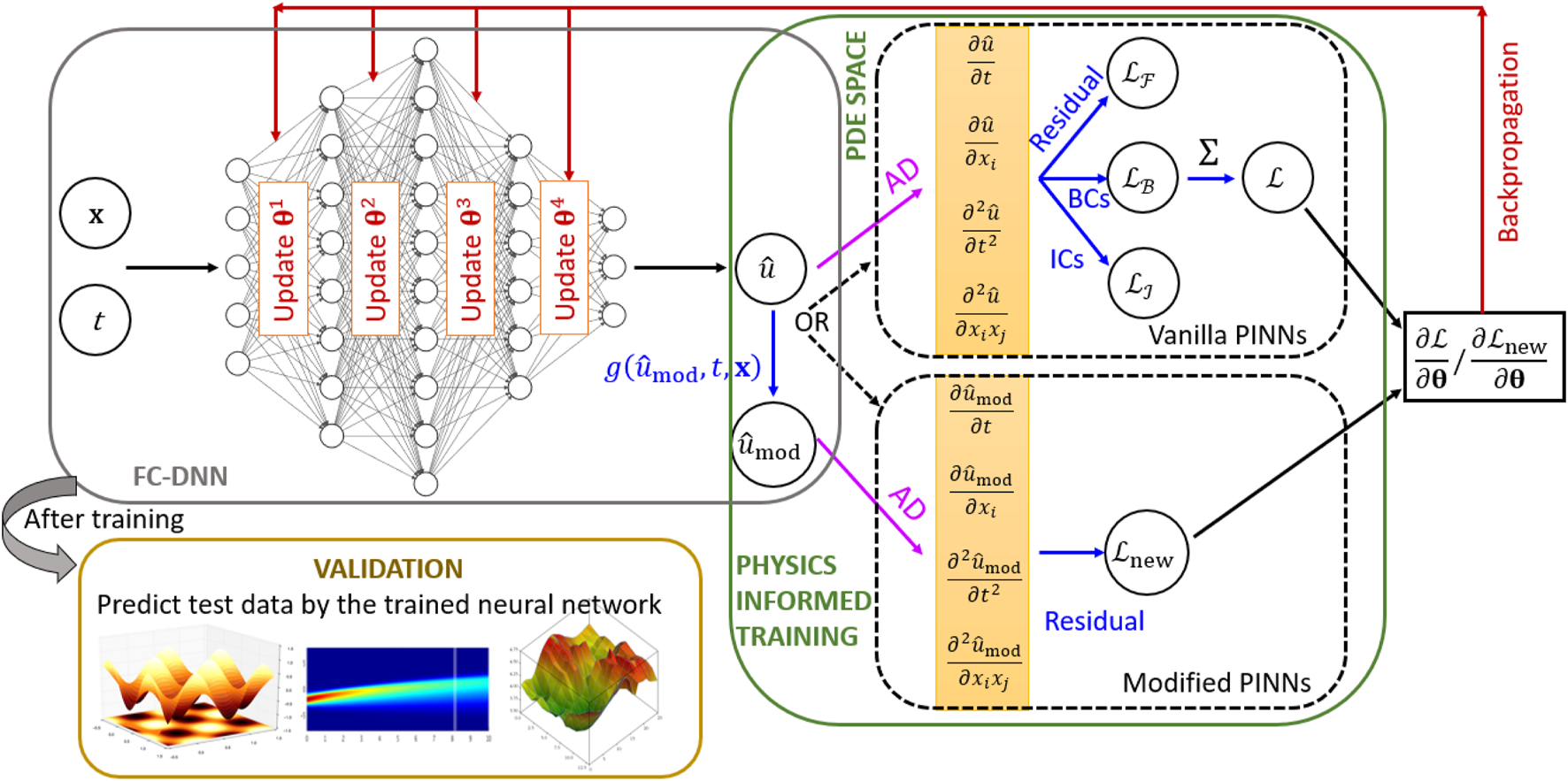

Next, a flow diagram of the PINNs architecture is presented in Figure 1 for further clarity. It depicts the encoding of the PDE physics in the form of soft constraints within the DNN, as illustrated by the physics-informed training block on the right side of the diagram. For generality, the flow diagram includes the training strategies adopted for both conventional (vanilla) and modified PINNs. Later, with the help of numerical examples, it is illustrated that the modified PINNs alleviate the scaling issue and lead to better approximation without generating any extra sampling points. As the physics changes from problem to problem depending on the ICs and BCs, the mapping function has to be determined separately for every problem. Once determined, it can be implemented with minimal effort, as demonstrated in the next section. It is worth noting that no computations are performed on the actual system (i.e., no response/output data is required) during the entire training phase of PINNs for capturing the forward solution; hence, PINNs act as a simulation-free ODE/PDE solver.

FIGURE 1

A schematic flow diagram of physics informed neural networks (PINNs). In the figure, the abbreviations FC-DNN, PDE, AD, BCs and ICs represent fully connected deep neural network, partial differential equation, automatic differentiation, boundary conditions and initial conditions, respectively. All of the symbols used here to express the mathematical quantities are explained in Formulation of Physics-Informed Neural Networks section.

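One useful feature of PINNs is that the same framework can be employed for solving inverse problems with a slight modification of the loss function. The necessary modification is discussed next. If the parameter $\lambda$ in Eq. 1 is not known, and instead a set of $N_m$ measurements $u_i^{m}$ of the response at points $(t_i^{m}, x_i^{m})$ is available, then an additional loss term minimizing the discrepancy between the measurements and the neural network output $\hat{u}$ can be defined as,

$$\mathcal{L}_{data}(\theta) = \frac{1}{N_m} \sum_{i=1}^{N_m} \left\| \hat{u}(t_i^{m}, x_i^{m}; \theta) - u_i^{m} \right\|^2 \tag{9}$$

This term determines the unknown parameters along with the solution. Thus, the combined loss term is expressed as,

$$\mathcal{L}(\theta, \lambda) = \mathcal{L}_{phys}(\theta, \lambda) + \mathcal{L}_{data}(\theta) \tag{10}$$

where $\mathcal{L}_{phys}$ denotes the physics-based loss of the forward problem (Eq. 6 for conventional PINNs or Eq. 8 for modified PINNs), now also depending on the unknown $\lambda$. Lastly, the parameters are computed by minimizing the loss function in Eq. 10 as shown below.

$$(\theta^{*}, \lambda^{*}) = \arg\min_{\theta, \lambda}\ \mathcal{L}(\theta, \lambda) \tag{11}$$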

MATLAB Implementation of PINNs

In this section, the implementation of PINNs in MATLAB is presented, following the theoretical formulation discussed in the previous section. A step-wise explanatory approach has been adopted for the reader's benefit, and care has been taken to keep the code as general as possible so that others can easily edit only the necessary portions for their purpose. The complete code has been divided into several sub-parts, and each of these is explained in detail separately for the solution of forward and inverse problems.

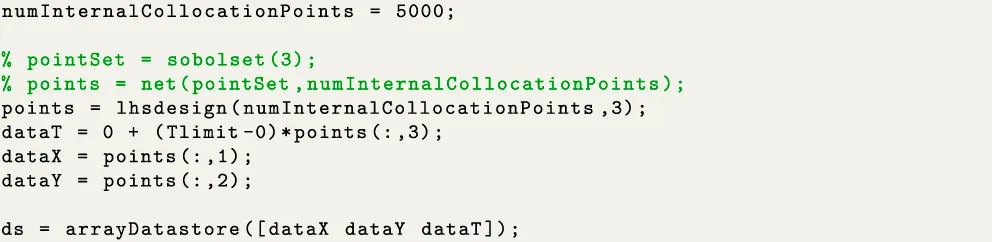

Input Data Generation

The first part is the input data generation. For the conventional PINNs, points have to be generated 1) in the interior of domain to satisfy the PDE residual, 2) on the boundary of domain to satisfy the boundary conditions, and 3) additional points to satisfy the initial conditions. However, in the modified approach, since the output is adapted so as to satisfy all of the conditions simultaneously, only the interior points are required to be generated. The part of the code generating the interior data points by Latin hypercube sampling has been illustrated in the following snippet.

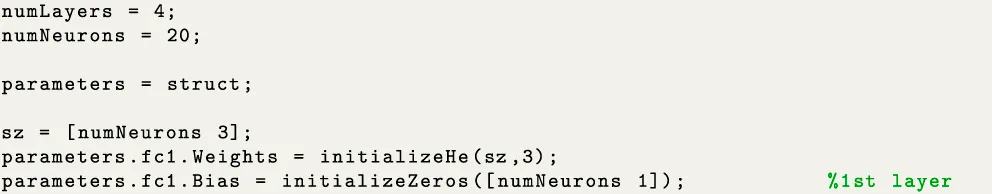
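For readers without access to the snippet, the sampling step can be sketched as follows. This is a minimal sketch rather than the authors' code: it assumes a one-dimensional time input, uses “lhsdesign” (Statistics and Machine Learning Toolbox) and “dlarray” (Deep Learning Toolbox), and the time horizon “tMax” is a hypothetical value.

```matlab
% Interior collocation points in time by Latin hypercube sampling
numPoints = 20000;                    % number of collocation points
tMax      = 5;                        % hypothetical time horizon (assumption)

points = lhsdesign(numPoints, 1);     % numPoints-by-1 samples in (0, 1)
T0     = tMax * points;               % rescale the samples to (0, tMax)

% Wrap as a formatted deep learning array: 1 channel by numPoints batch.
% The 'CB' format lets fully connected operations and AD act on the data.
dlT = dlarray(T0', 'CB');
```

For a problem with spatial inputs, the second argument of “lhsdesign” would be the number of input dimensions, with each column rescaled to its own range.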

Initialization of Network Parameters

Next, the fully connected deep neural network architecture is constructed according to the user-defined number of layers “numLayers” and number of neurons per layer “numNeurons.” The trainable parameters (weights and biases) of every layer are initialized and stored in the fields of a structure array called “parameters.” The initialization of the weights and biases of the first fully connected layer is captured by the following snippet. Here, the network weights are initialized by the He initialization [17] implemented by the function “initializeHe.” The He initializer samples the weights from a normal distribution with zero mean and variance $2/n_{in}$, where $n_{in}$ is the number of input channels. The function “initializeHe” takes two input arguments, the size of the trainable parameters “sz” and the number of input channels, and returns the sampled weights as a “dlarray” object. Note that “dlarray” is a built-in deep learning array in MATLAB employed for customizing the training process of DNNs. It enables numerous numerical operations including the computation of derivatives through AD. The network biases are initialized to zero by the function “initializeZeros.” Since the initialization schemes can be easily customized, other initializers such as Glorot (Xavier), Gaussian and orthogonal can be readily employed depending on the model type and the choice of the user. In fact, a wide variety of initialization schemes for the trainable parameters of various types of DNNs can be found in the MATLAB documentation (see footnote 1).

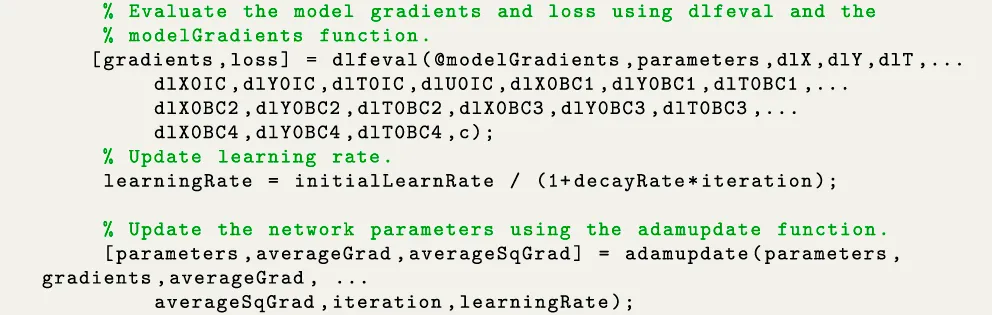
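The initialization described above can be sketched for all layers as below. This is an illustrative sketch under stated assumptions: the two anonymous functions are simplified stand-ins for the “initializeHe” and “initializeZeros” functions named in the text, and the sizes (one input, 20 neurons, five fully connected operations, one output) are assumed for a single-output problem in time.

```matlab
numLayers  = 5;     % fully connected operations (4 hidden layers + output), assumed
numNeurons = 20;    % neurons per hidden layer
numInputs  = 1;     % e.g., time only (assumption)

% Stand-ins for the initializers named in the text (simplified assumptions)
initializeHe    = @(sz, numIn) dlarray(sqrt(2/numIn) * randn(sz, 'single'));
initializeZeros = @(sz) dlarray(zeros(sz, 'single'));

parameters = struct;

% First layer: maps the inputs to the hidden width
parameters.fc1.Weights = initializeHe([numNeurons numInputs], numInputs);
parameters.fc1.Bias    = initializeZeros([numNeurons 1]);

% Intermediate layers: hidden width to hidden width
for k = 2:numLayers-1
    name = "fc" + k;
    parameters.(name).Weights = initializeHe([numNeurons numNeurons], numNeurons);
    parameters.(name).Bias    = initializeZeros([numNeurons 1]);
end

% Final layer: hidden width to a single output
name = "fc" + numLayers;
parameters.(name).Weights = initializeHe([1 numNeurons], numNeurons);
parameters.(name).Bias    = initializeZeros([1 1]);
```

Storing each layer under a field named “fc” followed by its index keeps the structure easy to traverse in a loop during the forward pass.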

Neural Network Training

At this stage, the network is trained with user-specified values of parameters such as the number of epochs, initial learning rate and decay rate, along with several other tuning options. It is worth noting that MATLAB offers multiple options for allocating hardware resources so that the network can be trained at an optimal computational cost. These include CPU, GPU, multi-GPU, parallel (local or remote) and cloud computing. The steps performed during model training within the nested loops over epochs and mini-batch iterations are illustrated in the following snippet. To recall, an epoch is a full pass of the training algorithm over the entire training set, and an iteration is one step of the gradient descent algorithm towards minimizing the loss function using a mini-batch. As can be observed from the snippet, three operations are involved during model training: 1) evaluating the model gradients and loss using “dlfeval” (see footnote 2) by calling the function “modelGradients” (which is explained in the next snippet), 2) updating the learning rate with every iteration and epoch, and 3) updating the network parameters using adaptive moment estimation (ADAM) [18]. In addition to ADAM, other stochastic gradient descent algorithms such as stochastic gradient descent with momentum (SGDM) and root mean square propagation (RMSProp) can be readily implemented via their built-in MATLAB routines.

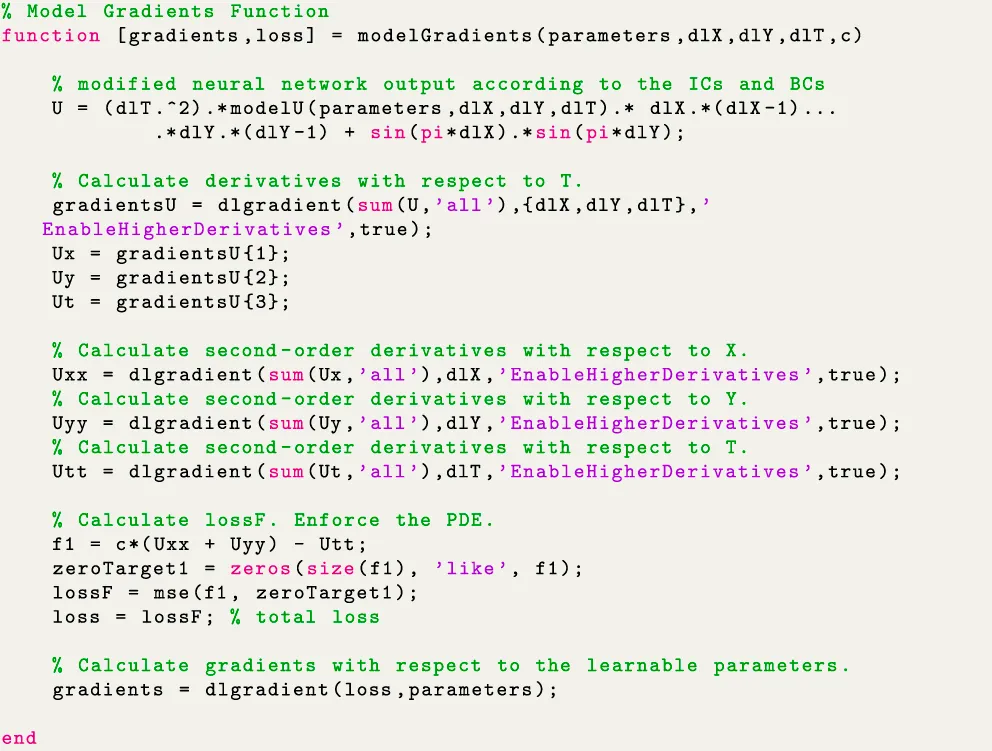
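The three operations can be sketched on a toy problem as follows. This is not the authors' training loop: the physics-based loss is replaced by a simple quadratic in two trainable values so that the sketch stays self-contained, and the learning-rate schedule, decay rate and loop sizes are assumed values. The calls “dlfeval”, “dlgradient” and “adamupdate” are the built-in Deep Learning Toolbox routines.

```matlab
numEpochs             = 10;      % assumed
numIterationsPerEpoch = 20;      % mini-batches per epoch (assumed)
initialLearnRate      = 0.01;
decayRate             = 0.005;   % assumed

p = dlarray([1; -2]);            % toy trainable parameters

% Toy stand-in for modelGradients: gradient of the loss sum(p.^2) w.r.t. p
lossGrad = @(p) dlgradient(sum(p.^2, 'all'), p);

averageGrad   = [];              % ADAM first-moment state
averageSqGrad = [];              % ADAM second-moment state
iteration     = 0;

for epoch = 1:numEpochs
    for i = 1:numIterationsPerEpoch
        iteration = iteration + 1;

        % 1) evaluate the gradients via automatic differentiation
        gradients = dlfeval(lossGrad, p);

        % 2) update the learning rate (time-based decay, an assumed schedule)
        learnRate = initialLearnRate / (1 + decayRate*iteration);

        % 3) update the parameters with ADAM
        [p, averageGrad, averageSqGrad] = adamupdate(p, gradients, ...
            averageGrad, averageSqGrad, iteration, learnRate);
    end
end

finalLoss = sum(extractdata(p).^2);   % initial loss was 1^2 + (-2)^2 = 5
```

In an actual PINNs run, “lossGrad” would be replaced by a handle to “modelGradients”, and “p” by the “parameters” structure together with the current mini-batch of collocation points.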

Encoding the Physics in the Loss Function

The next snippet presents the function “modelGradients.” This sub-routine is the distinctive feature of PINNs, where the physics of the problem is encoded in the loss function. As mentioned previously, in conventional PINNs, the system response is approximated by a DNN such that U=modelU(parameters,dlX,dlY,dlT). The expression of U in this snippet differs from this form because the DNN output is modified based on the ICs and BCs. Naturally, this modification changes from problem to problem. In this case, the expression is shown for illustration and is related to Eq. 33 of Example 4 defined in the next section. As the name suggests, “dlgradient” is used to compute the derivatives via AD. After evaluating the gradients, the loss term enforcing the PDE residual is computed.

As the modified DNN output ensures the satisfaction of the ICs and BCs, only the loss term corresponding to the PDE residual is necessary. If conventional PINNs were used instead, separate loss terms originating from the ICs and BCs would have to be added to the residual loss. Finally, the gradients of the combined loss w.r.t. the network parameters are computed and passed as the function output. These gradients are then used to update the network parameters.

When solving an inverse problem, another loss term is involved, which minimizes the discrepancy between the model prediction and the measured data. The parameter to be identified is treated as an additional trainable parameter of the DNN and is updated along with the network weights and biases. This can be easily implemented by adding the following line: c_update = parameters.("fc" + numLayers).opt_param; and evaluating the PDE residual as f1 = c_update*(Uxx + Uyy) - Utt in Example 4. In doing so, note that c in line 22 of the snippet will be replaced by c_update.

Fully Connect Operations

The “modelU” function is illustrated in the next snippet. Here, the fully connected deep neural network (FC-DNN) model is constructed according to the dimensionality of the input and the network parameters. In particular, the fully connect operations are performed via “fullyconnect.” This function forms a weighted sum of all the inputs for each output feature using the “weights,” and adds a “bias.” A sinusoidal activation function is used here. The sub-routine returns the weighted output features as a dlarray “dlU” having the same underlying data type as the input “dlXYT.”
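The two sub-routines described above can be sketched together as follows. This is a hedged sketch, not the authors' code: the network size, the random collocation points and the value of “c” are assumptions, the problem-specific modification of U that enforces the ICs and BCs is omitted, and only the residual expression c*(Uxx + Uyy) - Utt is taken from the text. The local functions are placed at the end of the script, as MATLAB requires for script files.

```matlab
% Hypothetical small network: 3 inputs (x, y, t), 2 hidden layers, 1 output
layerSizes = [3 20 20 1];
parameters = struct;
for k = 1:numel(layerSizes)-1
    nIn = layerSizes(k);  nOut = layerSizes(k+1);
    parameters.("fc" + k).Weights = dlarray(sqrt(2/nIn) * randn([nOut nIn], 'single'));
    parameters.("fc" + k).Bias    = dlarray(zeros([nOut 1], 'single'));
end

N   = 100;                                    % collocation points (assumed)
dlX = dlarray(rand(1, N, 'single'), 'CB');
dlY = dlarray(rand(1, N, 'single'), 'CB');
dlT = dlarray(rand(1, N, 'single'), 'CB');
c   = 1;                                      % assumed coefficient value

[gradients, loss] = dlfeval(@modelGradients, parameters, dlX, dlY, dlT, c);

function [gradients, loss] = modelGradients(parameters, dlX, dlY, dlT, c)
    U = modelU(parameters, dlX, dlY, dlT);
    % A problem-specific mapping of U enforcing the ICs/BCs would go here.

    % First derivatives via AD; higher derivatives must remain traceable
    [Ux, Uy, Ut] = dlgradient(sum(U, 'all'), dlX, dlY, dlT, ...
        'EnableHigherDerivatives', true);
    Uxx = dlgradient(sum(Ux, 'all'), dlX, 'EnableHigherDerivatives', true);
    Uyy = dlgradient(sum(Uy, 'all'), dlY, 'EnableHigherDerivatives', true);
    Utt = dlgradient(sum(Ut, 'all'), dlT, 'EnableHigherDerivatives', true);

    f1   = c*(Uxx + Uyy) - Utt;               % PDE residual from the text
    loss = mean(f1.^2, 'all');                % mean squared residual
    gradients = dlgradient(loss, parameters); % gradients w.r.t. weights/biases
end

function dlU = modelU(parameters, dlX, dlY, dlT)
    dlXYT     = [dlX; dlY; dlT];              % stack inputs along channels
    numLayers = numel(fieldnames(parameters));
    dlU = fullyconnect(dlXYT, parameters.fc1.Weights, parameters.fc1.Bias);
    for i = 2:numLayers
        name = "fc" + i;
        dlU  = sin(dlU);                      % sinusoidal activation
        dlU  = fullyconnect(dlU, parameters.(name).Weights, parameters.(name).Bias);
    end
end
```

The returned “gradients” structure mirrors “parameters” field by field, so it can be passed directly to an optimizer update such as “adamupdate.”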

Once the PINNs model is trained, it can be used to predict on the test dataset. It is worth noting that the deep learning library of MATLAB is rich and consists of a diverse range of built-in functions, providing users with adequate choice and modelling freedom. In the next section, the performance of conventional and modified PINNs is assessed for solving four representative structural vibration problems, involving the solution of ODEs (including multi-DOF systems) and a PDE. In doing so, both forward and inverse problems are addressed. Complete executable MATLAB codes of the PINNs implementation for all the example problems can be found in the Supplementary Material.

Numerical Examples

Forced Vibration of an Undamped Spring-Mass System

The forced vibration of the spring-mass system can be expressed by Eq. 12, where u, ü, ωn, fn, ω and t represent displacement, acceleration, natural frequency, forcing amplitude, forcing frequency and time, respectively. The initial conditions are u(t = 0) = 0 and u̇(t = 0) = 0, where u̇ represents the velocity. The analytical solution to the above system is given by Eq. 13,

where r = ω/ωn is the frequency ratio.
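For orientation, the closed-form response can be checked numerically under an explicit assumption about the forcing. The sketch below assumes the governing equation ü + ωn² u = fn sin(ωt), which may differ from the form used in Eq. 12, and hypothetical parameter values. Under this assumption, the response for zero initial conditions is u(t) = fn/(ωn²(1 − r²)) (sin ωt − r sin ωn t), and the script verifies by finite differences that this expression satisfies both the ODE and the initial conditions.

```matlab
wn = 3;  fn = 1;  w = 2;              % hypothetical values (assumptions)
r  = w / wn;                          % frequency ratio
A  = fn / (wn^2 * (1 - r^2));         % response amplitude factor

% Candidate solution for sinusoidal forcing with zero initial conditions
u = @(t) A * (sin(w*t) - r*sin(wn*t));

t   = linspace(0.1, 5, 200);
h   = 1e-4;                                       % finite-difference step
udd = (u(t+h) - 2*u(t) + u(t-h)) / h^2;           % central second difference

residual    = udd + wn^2*u(t) - fn*sin(w*t);      % ODE residual
maxResidual = max(abs(residual));

u0  = u(0);                                       % initial displacement
du0 = (u(h) - u(-h)) / (2*h);                     % initial velocity

fprintf('max |residual| = %.2e, u(0) = %g, du/dt(0) = %.2e\n', maxResidual, u0, du0);
```

The same residual expression is what a PINN minimizes at the collocation points, with the finite differences replaced by derivatives obtained through AD.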

As mentioned previously, in the PINNs framework, the solution space (of the ODE, in this case) can be approximated by a DNN output û such that û ≈ u, where the residual of the ODE is evaluated with the help of AD. Essentially, this is an optimization problem which can be expressed as Eq. 14, where $\|\cdot\|$ denotes the $L_2$-norm. For the numerical illustration, specific values are assumed for the system and forcing parameters. The displacement is approximated using a fully-connected neural network with 4 hidden layers and 20 neurons per layer. A sinusoidal activation function has been used due to the known periodic nature of the data [19]. 20,000 collocation points have been generated in time with the help of Latin hypercube sampling. The neural network is trained for 1,000 epochs with a mini-batch size of 1,000. The initial learning rate is set to 0.01 and the popular ADAM optimizer is employed. For testing the PINNs framework, 5,000 points were uniformly generated in time. The solution obtained using the PINNs framework has been compared with the actual (analytical) solution in Figure 2A.

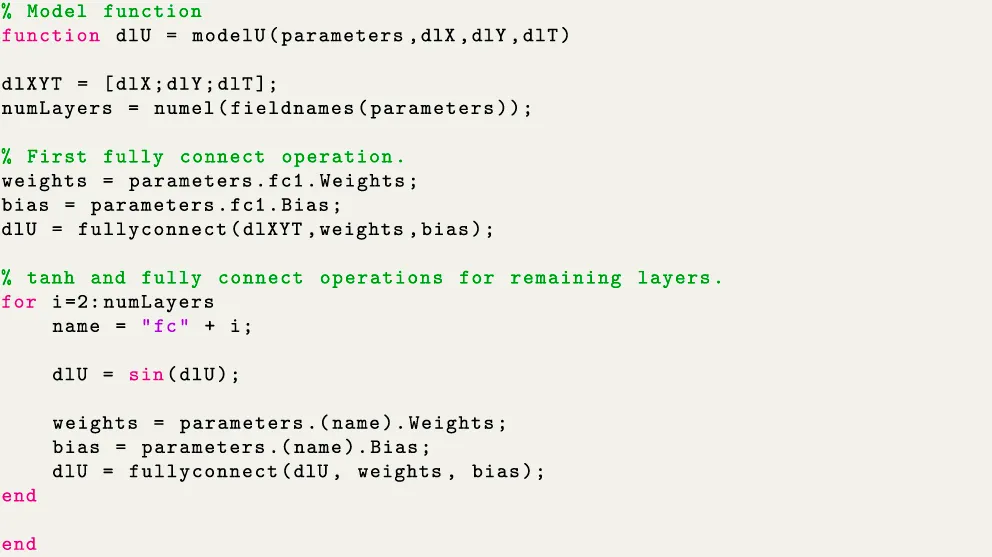

FIGURE 2

Results of the forced spring-mass system (A) Forward solution without modifying the neural network output. (B) Forward solution after modifying the neural network output. (C) Inverse solution in the form of convergence of the identified natural frequency ωn.

It can be observed from Figure 2A that the conventional PINNs framework is not capable of capturing the time response variation satisfactorily. As discussed in the previous sections, the reason is related to the regularization of the loss term in Eq. 14 and has been recently addressed in [16]. Although their approach proved to be effective, it entails extra computational effort.

Therefore, an alternative approach has been employed in this work to address the scaling issue, which requires no additional computational cost compared to that of conventional PINNs. To avoid multiple terms in the loss function, a simple scheme for modifying the neural network output has been adopted so that the initial and/or boundary conditions are satisfied. To automatically satisfy the initial conditions in the above problem, the output of the neural network is modified as given in Eq. 15.

With the modified neural network output, the new loss function, which contains only the ODE residual, can be expressed as Eq. 16.

Following this approach, a significant improvement in the approximation of the displacement response has been achieved, as shown in Figure 2B. Next, the implementation of PINNs is illustrated for an inverse setting. For doing so, the same problem as defined by Eq. 12 is re-formulated such that the displacement time history is given in the form of measurements and the natural frequency has to be identified. The optimization problem can be expressed as Eq. 17, which additionally involves the measured displacement data. 15,000 collocation points have been generated in time with the help of Latin hypercube sampling. 2,500 displacement data points were used for artificially simulating the measurement data and 5% uniform random noise was added. The architecture and the parameters of the neural network are the same as in the previous case. The results have been presented in the form of convergence of the identified parameter in Figure 2C. The converged value of ωn demonstrates an exact match with the actual value. It is worth mentioning that the PINNs framework inherently provides the solution to the ODE along with the identified parameter in the inverse setup. This demonstrates that the PINNs framework can be easily adapted for solving forward and inverse problems in structural vibration.

Forced Vibration of a Damped Spring-Mass System

The second example concerns the forced vibration of a damped spring-mass system, which can be expressed by Eq. 18, where u, u̇, ü, ωn, ζ, f0, ω and t represent displacement, velocity, acceleration, natural frequency, damping ratio, forcing amplitude, forcing frequency and time, respectively. The initial conditions are u(t = 0) = 0 and u̇(t = 0) = 0. The analytical solution to the above system can be found in [20].

As mentioned previously, in the PINNs framework, the solution space (of the ODE, in this case) can be approximated by a DNN output û such that û ≈ u, where the residual of the ODE is evaluated with the help of AD. Essentially, this is an optimization problem which can be expressed as Eq. 19, where $\|\cdot\|$ denotes the $L_2$-norm. For the numerical illustration, specific values are assumed for the system and forcing parameters. The displacement is approximated using a fully-connected neural network with 4 hidden layers and 20 neurons per layer. A sinusoidal activation function has been used due to the known periodic nature of the data [19]. The neural network is trained for 1,000 epochs with a mini-batch size of 1,000. The initial learning rate is set to 0.01 and the popular ADAM optimizer is employed. The solution obtained using the PINNs framework has been compared with the actual (analytical) solution in Figure 3A. To obtain the results in Figures 3A, B, 20,000 collocation points have been generated in time with the help of Latin hypercube sampling for training, and 5,000 points were uniformly generated in time for testing.

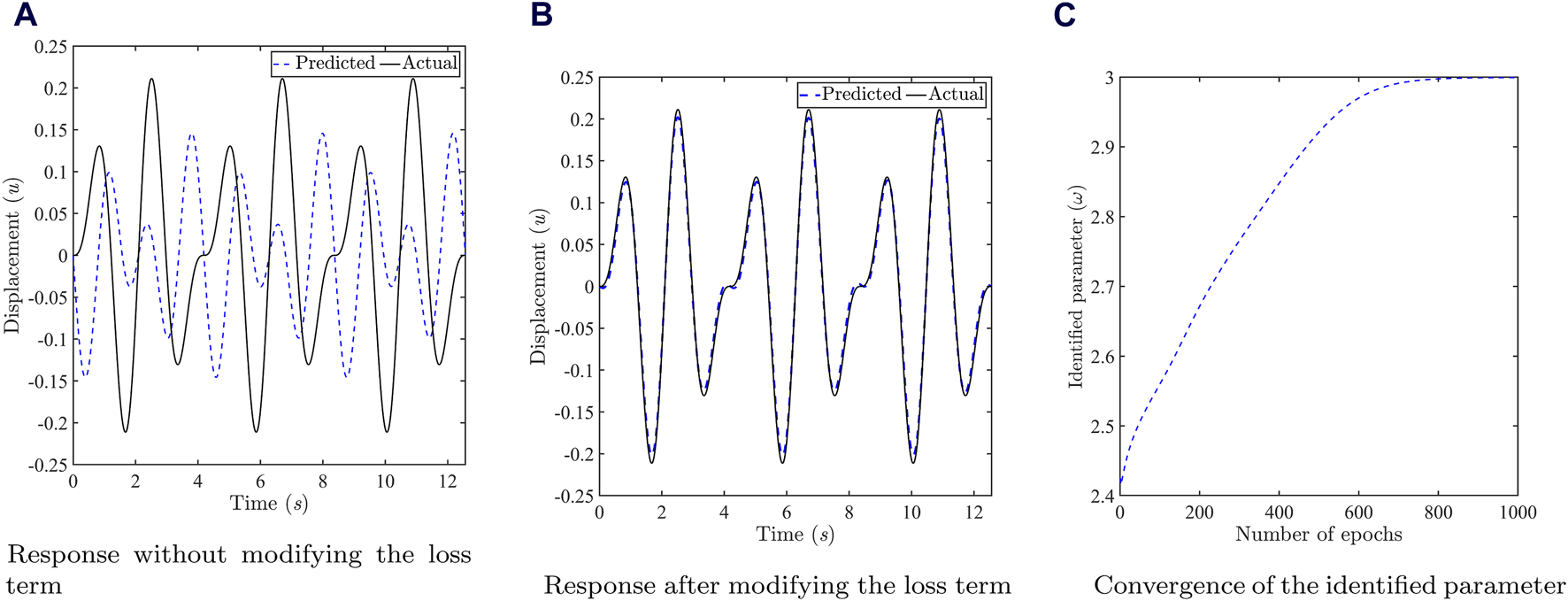

FIGURE 3

Results of the damped forced spring-mass system (A) Forward solution without modifying the neural network output. (B) Forward solution after modifying the neural network output. (C) Forward solution over extended time after modifying the neural network output to observe the steady state response (after the transients have died out).

It can be observed from Figure 3A that the conventional PINNs framework is not capable of capturing the time response variation satisfactorily. As discussed in the previous sections, the reason is related to the regularization of the loss term in Eq. 14. To automatically satisfy the initial conditions, the modified output of the neural network is the same as Eq. 15, as the initial conditions are identical to those of the first example. The new loss function can therefore be expressed as Eq. 20.

Following this approach, a significant improvement in the approximation of the displacement response has been achieved, as shown in Figure 3B. The displacement response is presented over extended time in Figure 3C so as to investigate the performance of PINNs on the steady state response after the transients have died out. For generating the result in Figure 3C, 60,000 collocation points have been generated in time for training the network. For testing the PINNs framework, 40,000 points were uniformly generated in time. The approximation by PINNs is found to be excellent in terms of capturing the response trends.

Next, the implementation of PINNs is illustrated for an inverse setting. For doing so, the same problem as defined by Eq. 18 is re-formulated such that the displacement time history is given in the form of measurements and both the natural frequency and the damping ratio have to be identified simultaneously. The optimization problem can be expressed as Eq. 21, which additionally involves the measured displacement data. 10,000 collocation points have been generated in time with the help of Latin hypercube sampling. 1,000 displacement data points were used for artificially simulating the measurement data and 1% uniform random noise was added. The architecture and the parameters of the neural network are the same as in the previous case.

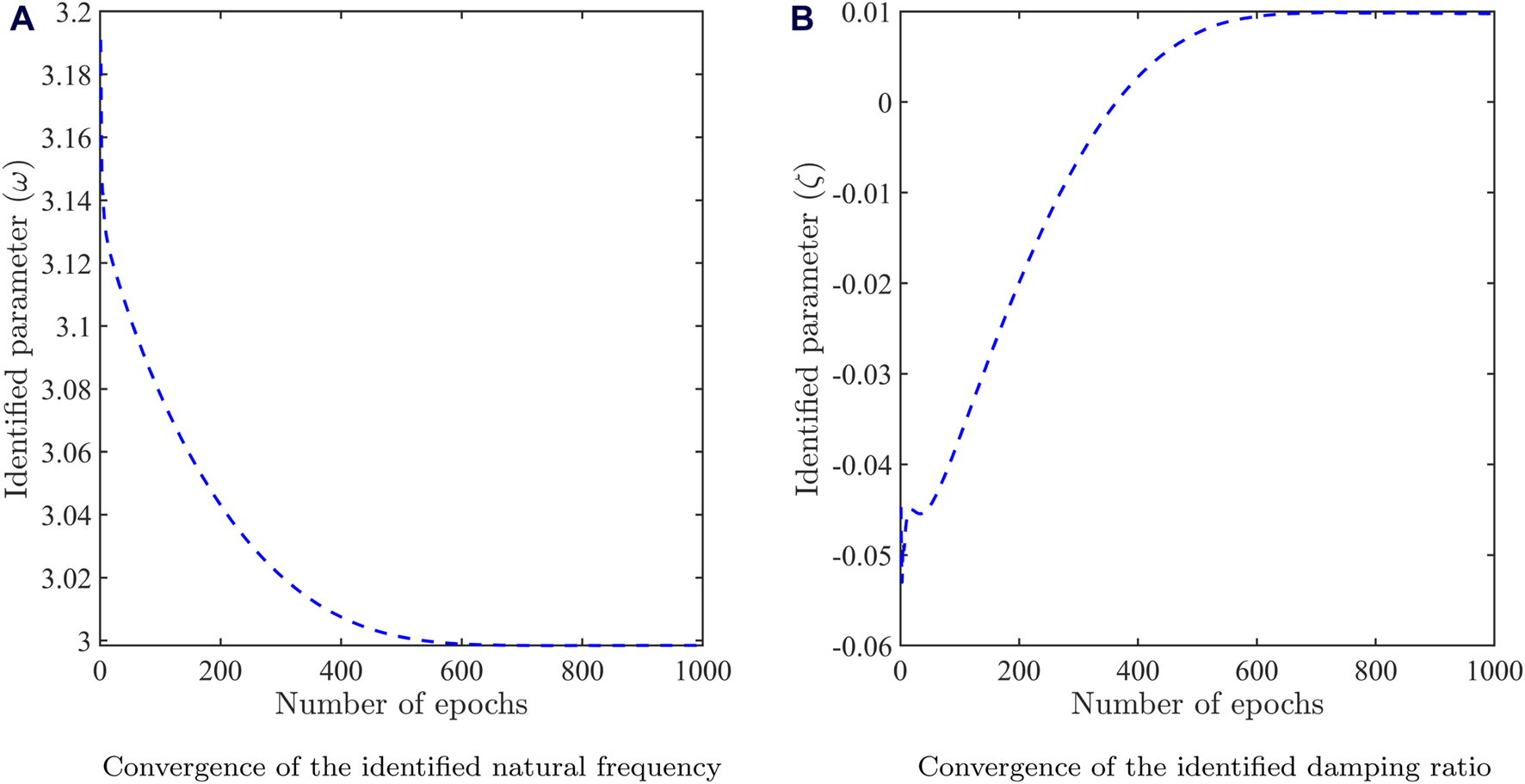

The results have been presented in the form of convergence of the identified parameters (natural frequency and damping ratio) in Figure 4. The converged values of ωn and ζ demonstrate a close match with the actual values of 3 and 0.01, respectively. This demonstrates that the PINNs framework can be easily adapted for solving forward and inverse problems in structural vibration.

FIGURE 4

Identification results for the damped forced spring-mass system (A) Convergence of the identified natural frequency. (B) Convergence of the identified damping ratio.

Free Vibration of a 2-DOF Discrete System

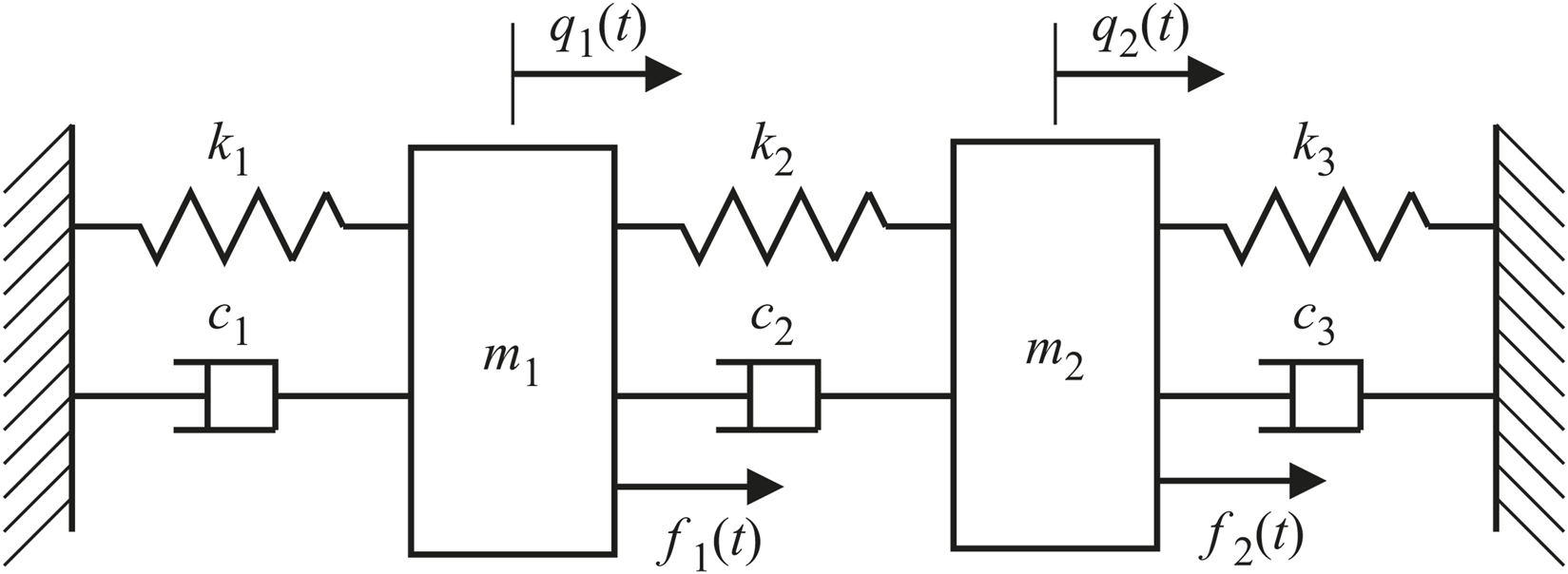

A 2-DOF lumped mass system as shown in Figure 5 is considered in this example [20]. This example has been included to illustrate the application of PINNs in a multi-output setting for the inference and identification of multi-degree-of-freedom systems. The governing ODE is given in Eq. 22, with prescribed initial displacements and velocities. In Eq. 22, $u_i$, $\dot{u}_i$ and $\ddot{u}_i$ represent the displacement, velocity and acceleration of the $i$th DOF, respectively, $m_i$ and $f_i$ represent the mass of the $i$th DOF and the force acting at the $i$th DOF, respectively, and $c_i$ and $k_i$ are the damping and stiffness coefficients of the $i$th connecting element, respectively. For this 2-DOF system, $i = 1, 2$. Since the free vibration problem has been undertaken, the right hand side of Eq. 22 is zero. Two cases of the free vibration problem have been considered, undamped and damped. For each of these cases, both forward and inverse formulations have been presented. The analytical solutions to the above governing ODE for the undamped and damped cases are given by Eqs. 23 and 24, respectively, in which the constants have to be determined from the given initial conditions and the number of DOFs is two for the above system. The undamped natural frequencies and mode shape vectors are obtained from the modal analysis. Eq. 24 additionally involves the damping ratios and damped natural frequencies.

FIGURE 5

A schematic representation of the 2-DOF lumped mass system.
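The modal analysis that supplies the undamped natural frequencies and mode shapes can be sketched as below. The sketch rests on assumptions: it takes a chain configuration in which the first spring connects the first mass to the ground and the second spring connects the two masses (the actual configuration is the one shown in Figure 5), and the mass and stiffness values are hypothetical.

```matlab
m1 = 1;  m2 = 1;  k1 = 1;  k2 = 1;      % hypothetical values (assumptions)

M = diag([m1 m2]);                      % mass matrix
K = [k1 + k2, -k2;                      % stiffness matrix of the assumed chain
     -k2,      k2];

% Generalized eigenvalue problem K*phi = lambda*M*phi, with lambda = wn^2
[Phi, Lambda] = eig(K, M);
[lambda, idx] = sort(diag(Lambda));     % sort modes by ascending frequency
Phi = Phi(:, idx);                      % mode shape vectors (columns)
wn  = sqrt(lambda);                     % undamped natural frequencies (rad/s)

fprintf('wn = [%.6f, %.6f] rad/s\n', wn(1), wn(2));
```

For these unit values, the eigenvalues are (3 ∓ √5)/2, so the natural frequencies evaluate to (√5 − 1)/2 and (√5 + 1)/2 rad/s.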

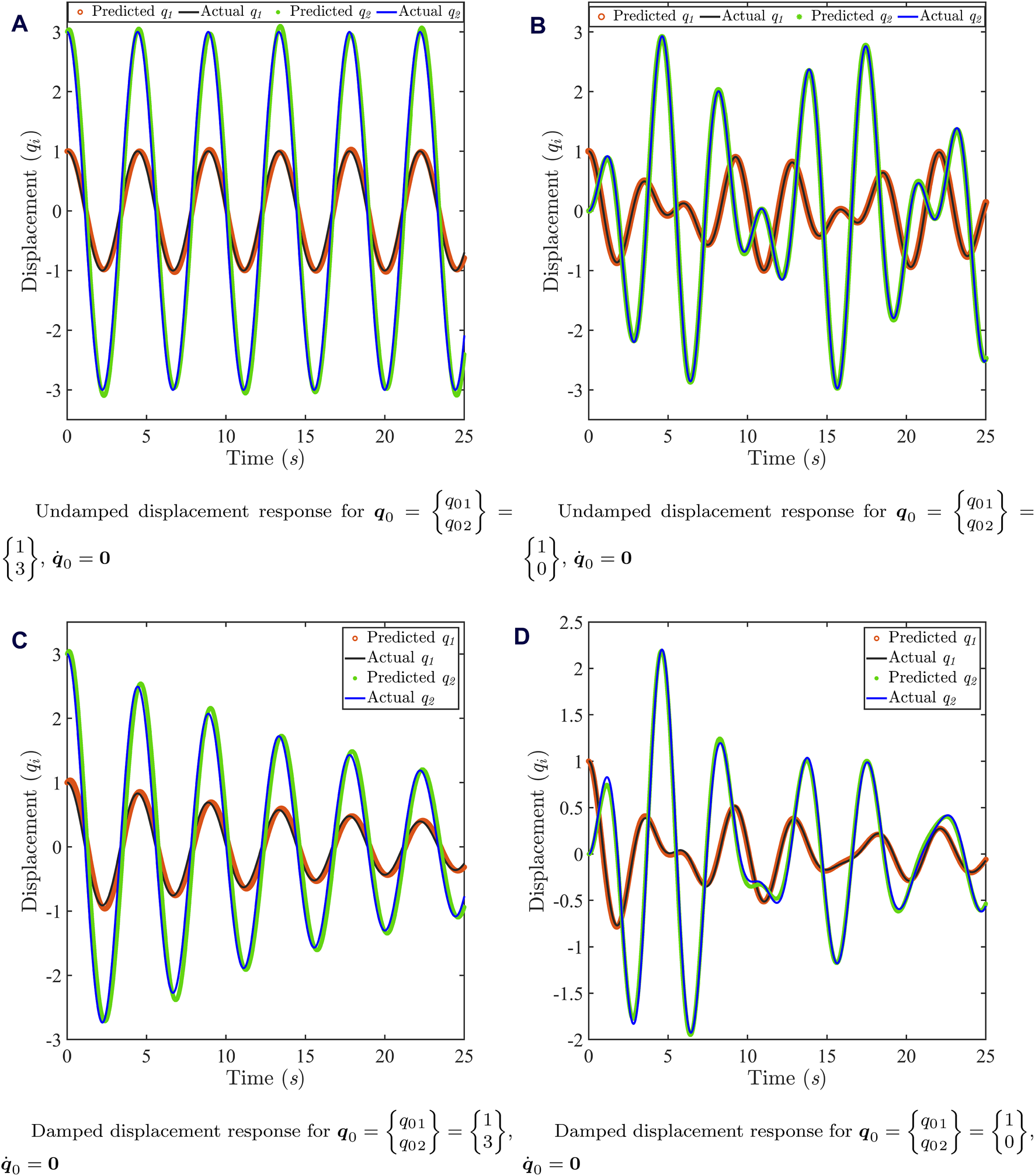

In contrast to the previous examples, the response associated with each DOF has to be represented by an output node of a (multi-output) FC-DNN. Since the above example is a 2-DOF system, the responses of the two DOFs are represented by two output nodes of an FC-DNN in the PINNs architecture. The optimization problem can be expressed as Eq. 25, where the gradients arising in Eq. 25 can be computed by AD. Specific values are adopted for the system parameters and initial conditions. An FC-DNN with 4 hidden layers and 20 neurons per layer is used. A sinusoidal activation function has been used due to the known periodic nature of the data. The neural network is trained for 1,000 epochs with a mini-batch size of 1,000. The initial learning rate is set to 0.01 and the popular ADAM optimizer is employed. Collocation points have been generated in time with the help of Latin hypercube sampling to obtain the results in Figures 6–8. For testing the conventional PINNs framework, 10,000 points were uniformly generated in time to obtain the results in Figure 6. The undamped and damped time responses obtained using the conventional PINNs framework have been compared with the actual (analytical) solution in Figure 6.

FIGURE 6

Results of free vibration of the 2-DOF lumped mass system. (A) Undamped response for the IC exciting only the first mode. The predicted responses have been obtained using 60,000 collocation points. (B) Undamped response for the general IC. The predicted responses have been obtained using 100,000 collocation points. (C) Damped response for the IC exciting only the first mode. The predicted responses have been obtained using 40,000 collocation points. (D) Damped response for the general IC. The predicted responses have been obtained using 120,000 collocation points.

It can be observed from Figure 6 that the conventional PINNs framework is capable of capturing the undamped and damped time response variation satisfactorily for two different ICs. The first IC is adopted so that the system vibrates with the first natural frequency only, as shown in Figures 6A, C, whereas the second IC is a more general one resulting in a multi-frequency response, as shown in Figures 6B, D. It is worth mentioning that the beat phenomenon exists in the free response of the above 2-DOF system due to the close proximity of the two natural frequencies.

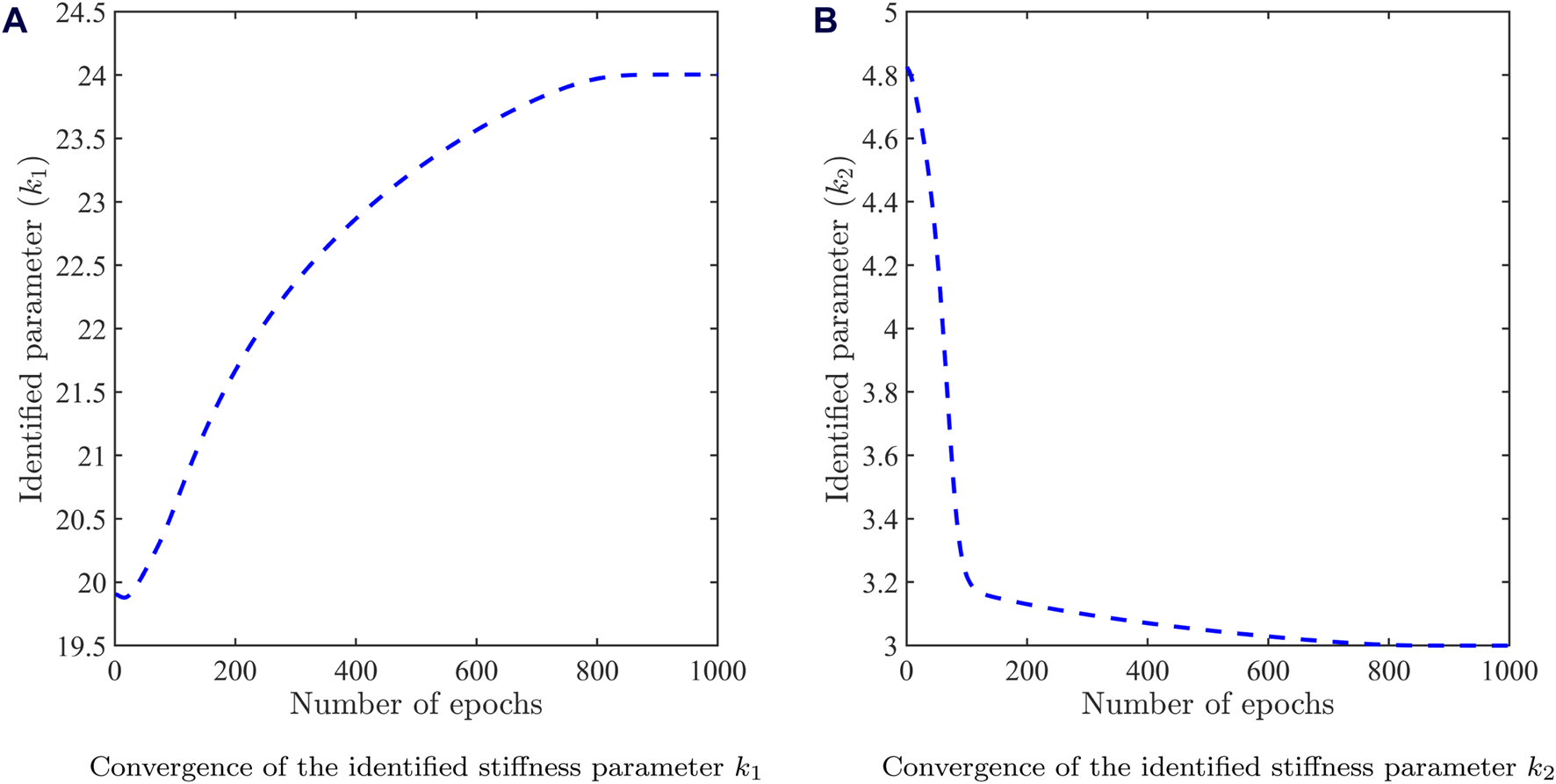

Next, PINNs have been implemented in an inverse setup for the identification of system parameters for both the undamped and damped cases. For doing so, the same problem as defined by Eq. 22 is re-formulated such that the displacement time history data is available in the form of measurements, and the stiffness parameters ($k_1$ and $k_2$) for the undamped case and the stiffness and damping parameters ($k_1$, $k_2$, $c_1$ and $c_2$) for the damped case have to be identified simultaneously. The optimization problems for the undamped and damped cases can be expressed as Eqs. 26 and 27, respectively, which additionally involve the measured displacement data. Collocation points have been generated in time with the help of Latin hypercube sampling. 2,000 displacement data points were used for artificially simulating the measurement data and 1% uniform random noise was added. The architecture and the parameters of the neural network are the same as in the forward formulation. The results have been presented in the form of convergence of the identified system parameters in Figures 7, 8 for the undamped and damped cases, respectively.

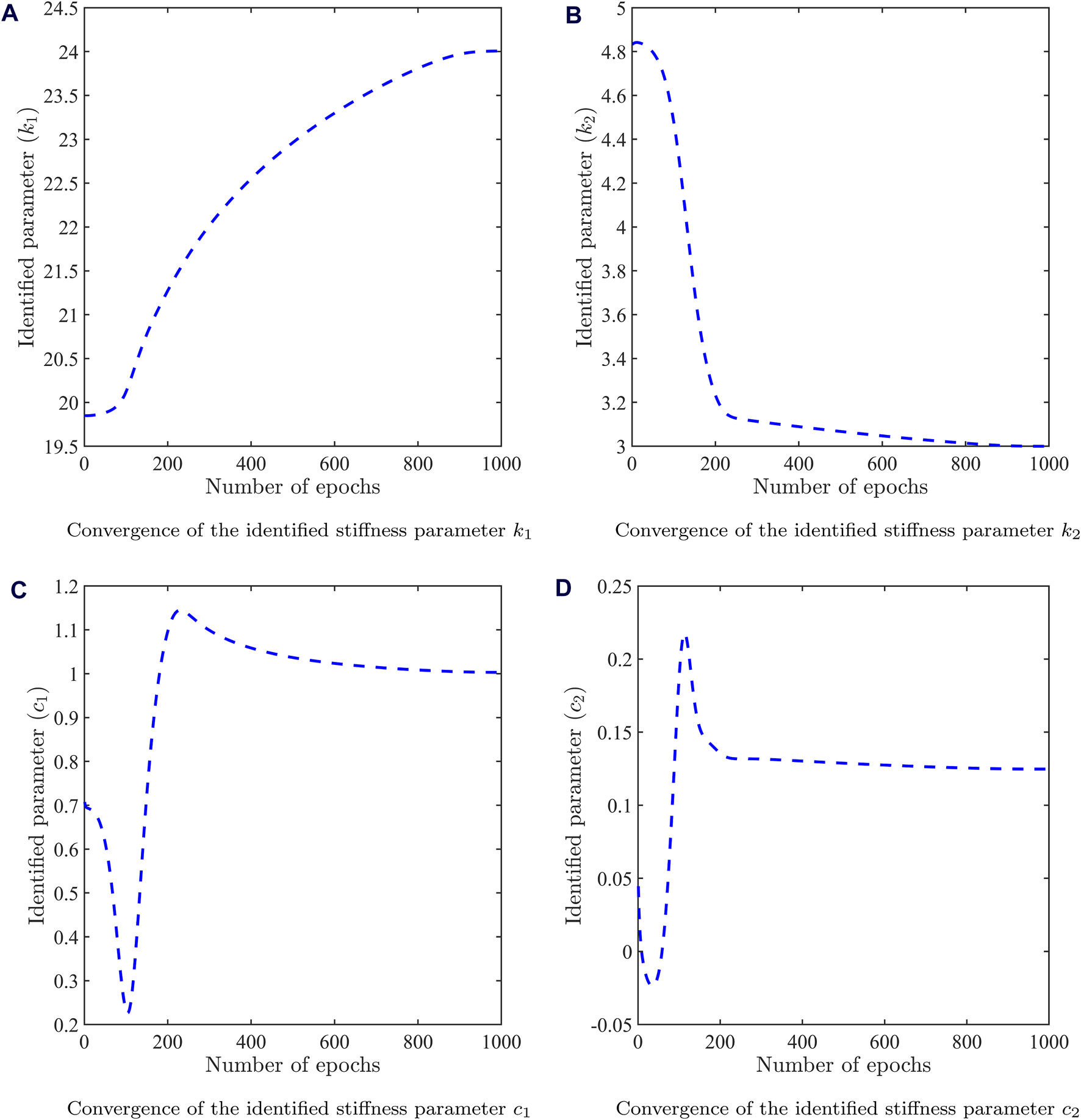

FIGURE 7

Identification results for the undamped 2-DOF system (A) Convergence of the identified stiffness parameter . (B) Convergence of the identified stiffness parameter . For obtaining these results, 45,000 collocation points and 2,000 data points with 1% random uniform noise were used to train the PINNs model.

FIGURE 8

Identification results for the damped 2-DOF system (A) Convergence of the identified stiffness parameter , (B) Convergence of the identified stiffness parameter , (C) Convergence of the identified damping parameter , (D) Convergence of the identified damping parameter . For obtaining these results, 70,000 collocation points and 2,000 data points with 1% random uniform noise were used to train the PINNs model.

The converged values of and have been obtained from Figure 7 for the undamped case. The converged values of , , and have been obtained from Figure 8 for the damped case. The converged values of the identified system parameters closely match the actual values , , and . This demonstrates that the PINNs framework can be easily adapted for solving forward and inverse problems in multi-DOF systems. In addition to the adopted strategy of employing a single two-output FC-DNN to solve the 2-DOF system, two individual single-output FC-DNNs were investigated. However, the latter failed to map the time response accurately, because two independent networks cannot adequately capture the dependencies of the coupled differential equations and hence cannot minimize the loss.

Free Vibration of a Rectangular Membrane

A rectangular membrane with unit dimensions excited by an initial displacement has been considered in this example. The governing partial differential equation (PDE), initial and boundary conditions can be expressed as

where, is the displacement and is the velocity of wave propagation. In Eqs 28–31, , represent the spatial coordinates, represents time and denotes the spatial domain. The analytical solution to the governing PDE is .
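A closed-form solution of this kind can be checked numerically against the wave equation. The sketch below assumes a unit-square membrane with fixed edges, zero initial velocity, a unit wave velocity and the initial shape sin(πx)·sin(πy). These are illustrative assumptions and not necessarily the conditions of Eqs 28–31. The PDE residual is evaluated with central finite differences instead of AD to keep the example dependency-free.

```python
import math

C = 1.0  # assumed wave velocity

def u_exact(x, y, t):
    """Standing-wave solution for the assumed initial shape sin(pi x) sin(pi y)."""
    omega = math.sqrt(2.0) * math.pi * C
    return math.sin(math.pi * x) * math.sin(math.pi * y) * math.cos(omega * t)

def second_diff(f, z, h):
    """Central finite-difference approximation of f''(z)."""
    return (f(z + h) - 2.0 * f(z) + f(z - h)) / (h * h)

def wave_residual(u, x, y, t, h=1e-3):
    """Residual u_tt - C^2 (u_xx + u_yy) of the 2D wave equation at one point."""
    u_tt = second_diff(lambda s: u(x, y, s), t, h)
    u_xx = second_diff(lambda s: u(s, y, t), x, h)
    u_yy = second_diff(lambda s: u(x, s, t), y, h)
    return u_tt - C * C * (u_xx + u_yy)

# The residual is small (finite-difference error only) at an interior point,
# and the displacement vanishes on a fixed edge.
print(wave_residual(u_exact, 0.3, 0.6, 0.1))
print(u_exact(0.0, 0.4, 0.2))
```

The same `wave_residual` helper can be applied to any candidate function of `(x, y, t)`, which makes it a convenient sanity check for a trained surrogate.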

Using the PINNs framework, solution of the PDE is approximated by a DNN such that , where residual of the PDE is evaluated with the help of AD. The optimization problem can be expressed as,
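For orientation, a composite loss of the kind used in conventional PINNs for a wave equation typically takes the form below. This is a generic sketch and not a reproduction of Eq. 32. The notation is assumed: $\hat{u}$ is the network output with parameters $\theta$, $u_0$ is the initial displacement, and $N_r$, $N_0$, $N_b$ are the numbers of residual, initial and boundary points. Zero initial velocity and fixed edges are also assumptions.

```latex
\begin{aligned}
\mathcal{L}(\theta) ={}& \frac{1}{N_r}\sum_{i=1}^{N_r}
  \left| \hat{u}_{tt} - c^{2}\left(\hat{u}_{xx} + \hat{u}_{yy}\right) \right|^{2}_{(x_i,\,y_i,\,t_i)}
  && \text{(PDE residual)} \\
&+ \frac{1}{N_0}\sum_{j=1}^{N_0}
  \left| \hat{u}(x_j, y_j, 0) - u_0(x_j, y_j) \right|^{2}
  + \frac{1}{N_0}\sum_{j=1}^{N_0}
  \left| \hat{u}_{t}(x_j, y_j, 0) \right|^{2}
  && \text{(initial conditions)} \\
&+ \frac{1}{N_b}\sum_{k=1}^{N_b}
  \left| \hat{u}(x_k, y_k, t_k) \right|^{2}
  && \text{(boundary condition)}
\end{aligned}
```

Because the terms are simply summed, they compete during training, and nothing guarantees that the initial and boundary terms are driven to zero together with the residual.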

The displacement is approximated using a fully-connected neural network with 4 hidden layers and 20 neurons per layer. A sinusoidal activation function has been used. 5,000 collocation points are generated in space and time with the help of Latin hypercube sampling. The neural network is trained for 1,000 epochs with a mini-batch size of 1,000. The initial learning rate is set to 0.01 and the popular ADAM optimizer is employed. For testing the PINNs framework, 1,000 points were uniformly generated for and . The solution in space obtained using the PINNs framework (Figure 9B) has been compared with the actual (analytical) solution (Figure 9A) for four different time instants and 0.25.

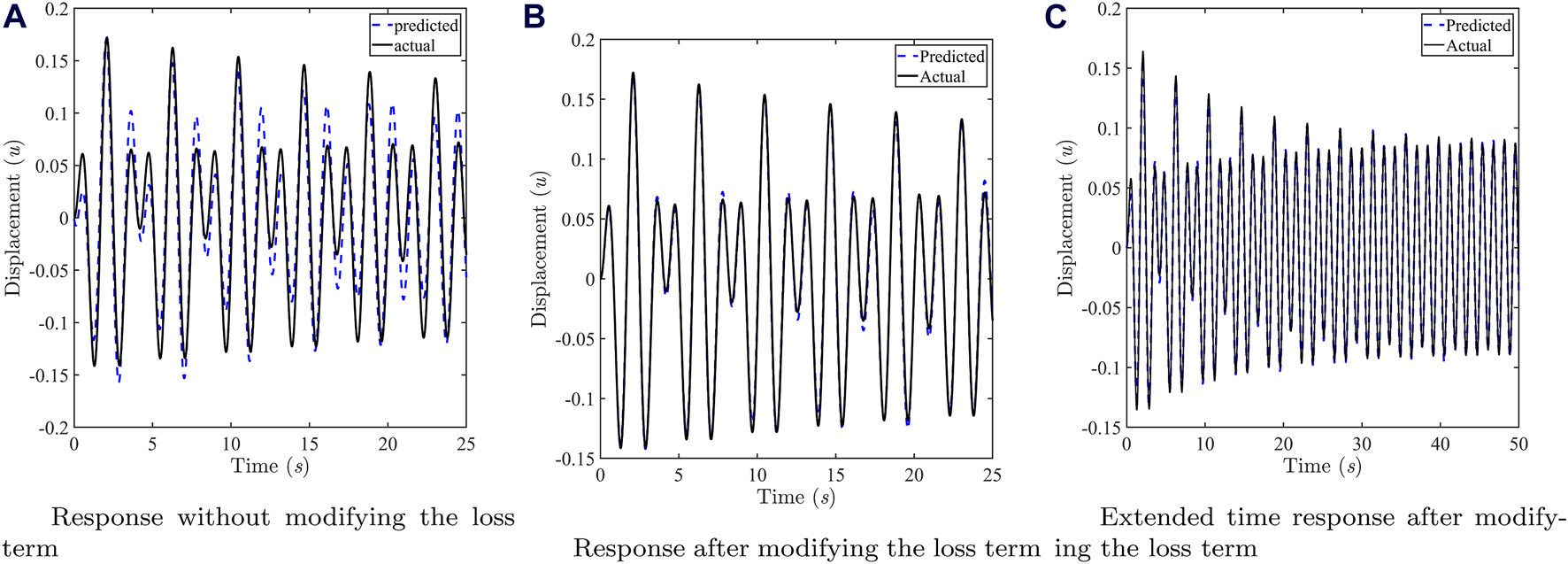

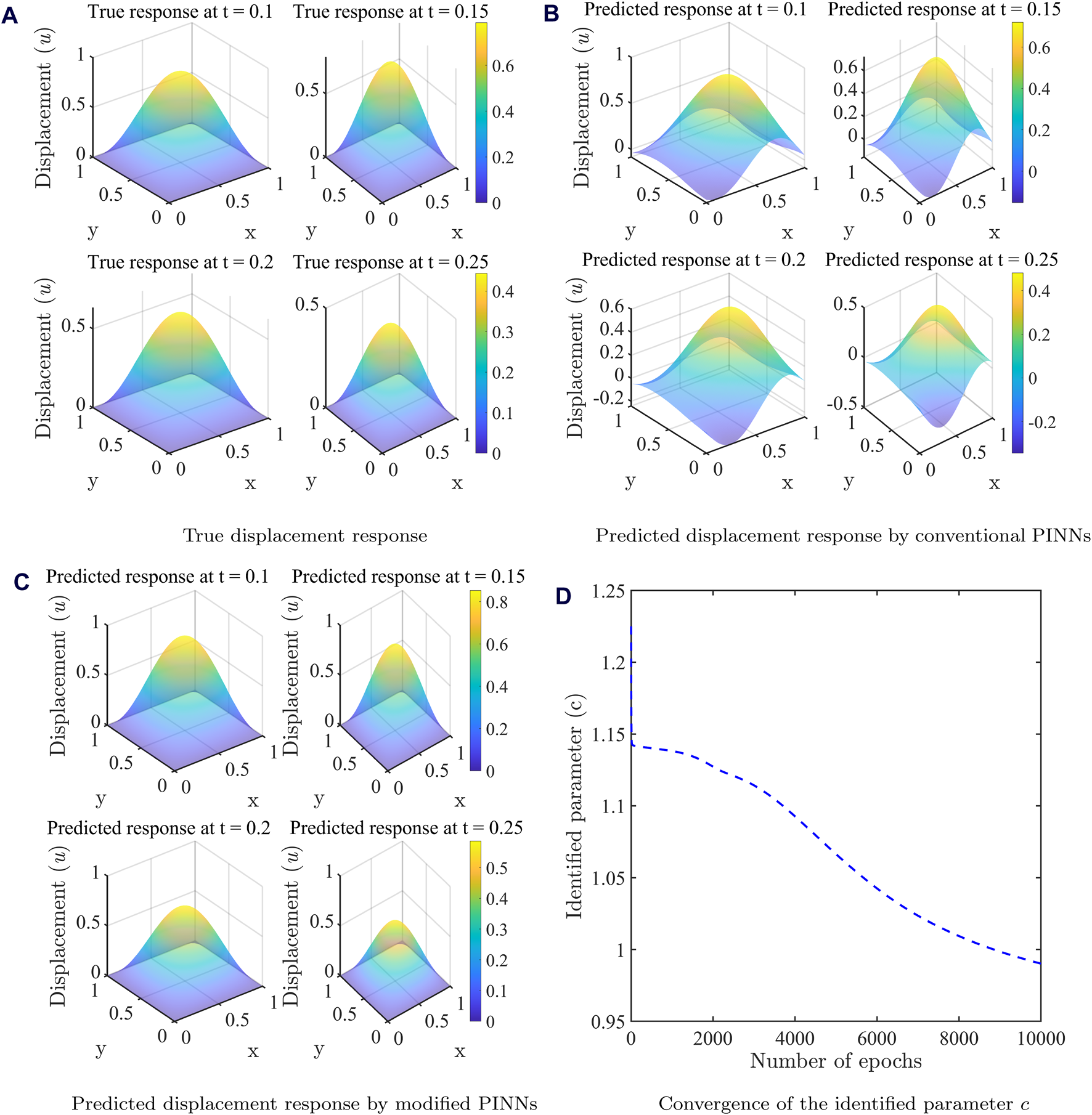

FIGURE 9

Results of free vibration of the rectangular membrane (A) True forward spatial solution, (B) Predicted forward spatial solution by conventional PINNs, (C) Predicted forward spatial solution by modified PINNs, (D) Inverse solution in the form of convergence of the identified parameter .

It can be observed from Figure 9B that the conventional PINNs framework is not capable of capturing the response satisfactorily. The reason is once again related to the regularization of the loss term in Eq. 32: the terms of the loss function related to the residual, the initial conditions and the boundary conditions are not satisfied simultaneously. Specifically, Figure 9B shows that the response predicted by conventional PINNs does not satisfy the condition at the boundary of the domain.

To ensure the satisfaction of residual, initial and boundary conditions and improve upon the approximation accuracy, the neural network output has been modified as,

Since the modified neural network output is , the new optimization problem can be expressed as,
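One common way to build the initial and boundary conditions into the network output, so that only the PDE residual remains in the loss, is sketched below. The specific ansatz, the initial shape `u0` and the stand-in function `raw_network` are assumptions for illustration and are not necessarily the modification used in this article.

```python
import math

def u0(x, y):
    """Assumed initial displacement; vanishes on the edges of the unit square."""
    return math.sin(math.pi * x) * math.sin(math.pi * y)

def raw_network(x, y, t):
    """Stand-in for the unconstrained DNN output (any smooth function serves here)."""
    return math.tanh(0.7 * x - 1.3 * y + 2.1 * t) + 0.5

def constrained_output(x, y, t, net=raw_network):
    """Modified output that meets the IC and BC for any `net`.

    - The factor t**2 makes the correction and its time derivative vanish at t = 0,
      so the initial displacement is u0 and the initial velocity is zero.
    - The factor x(1-x)y(1-y) vanishes on all four edges of the unit square.
    """
    edge = x * (1.0 - x) * y * (1.0 - y)
    return u0(x, y) + t * t * edge * net(x, y, t)

# Initial condition and boundary condition hold regardless of the network weights.
print(constrained_output(0.3, 0.6, 0.0) - u0(0.3, 0.6))
print(constrained_output(0.0, 0.4, 0.2))
```

With such an output, the optimizer no longer has to balance competing loss terms, which is the motivation for the modified formulation.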

Following this modified PINNs approach, significant improvement in the spatial distribution of the displacement response has been achieved, as shown in Figure 9C.

Next, the implementation of PINNs has been illustrated in solving another inverse problem. To do so, the problem defined by Eqs 28–31 is re-formulated such that the displacement time history is given in the form of measurements and the wave velocity has to be identified. The optimization problem can be expressed as,

where, represents the measured displacement data. 25,000 collocation points have been generated in space and time with the help of Latin hypercube sampling. To simulate the measurement data artificially, 5,000 displacement data points were used and 2% uniform random noise was added. The architecture and the parameters of the neural network are the same as for the forward problem. The results are presented in the form of convergence of the identified parameter at the end of 10,000 epochs in Figure 9D. The converged value of closely matches the actual value . It is worth noting that, in the inverse setup, the PINNs framework inherently provides the solution to the PDE along with the identified parameter. This demonstrates that the PINNs framework can be easily adapted for solving forward and inverse problems in structural vibration.
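The idea of identifying the wave velocity from noisy displacement data can be illustrated with a much simpler stand-in for the PINNs procedure. The sketch below replaces the network with an assumed closed-form solution (unit square, fixed edges, initial shape sin(πx)·sin(πy)) and recovers `c` by a grid search on the data misfit. The true value, the noise convention (uniform within ±2% of the unit peak displacement), the sample size and the search range are all hypothetical.

```python
import math
import random

def u_model(x, y, t, c):
    """Assumed closed-form membrane response for wave velocity c."""
    omega = math.sqrt(2.0) * math.pi * c
    return math.sin(math.pi * x) * math.sin(math.pi * y) * math.cos(omega * t)

def identify_wave_velocity(data, c_grid):
    """Return the candidate c that minimizes the mean squared data misfit."""
    best_c, best_misfit = None, float("inf")
    for c in c_grid:
        misfit = sum((u_model(x, y, t, c) - d) ** 2 for x, y, t, d in data) / len(data)
        if misfit < best_misfit:
            best_c, best_misfit = c, misfit
    return best_c

rng = random.Random(0)   # fixed seed for reproducibility
C_TRUE = 1.0             # hypothetical "actual" wave velocity
NOISE = 0.02             # 2% of the unit peak displacement (assumed convention)

# Synthetic noisy measurements at random space-time locations.
data = []
for _ in range(500):
    x, y, t = rng.random(), rng.random(), 0.25 * rng.random()
    data.append((x, y, t, u_model(x, y, t, C_TRUE) + rng.uniform(-NOISE, NOISE)))

c_grid = [0.5 + 0.001 * i for i in range(1001)]  # candidates in [0.5, 1.5]
c_hat = identify_wave_velocity(data, c_grid)
print(c_hat)
```

In the PINNs setting, the closed-form model is not available; the network supplies the displacement field and the PDE residual ties it to `c`, so that `c` and the network weights are updated together by the optimizer.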

Summary and Conclusion

This work presents the MATLAB implementation of PINNs for solving forward and inverse problems in structural vibrations. The contribution of the study lies in the following:

1. It is one of the very few applications of PINNs in structural vibrations to date and thus aims to fill this gap. This also makes the work timely.

2. It demonstrates a critical drawback of the first generation PINNs while solving vibration problems, which leads to inaccurate predictions.

3. It mostly addresses the above drawback with the help of a simple modification in the PINNs framework without adding any extra computational cost. This results in significant improvement in the approximation accuracy.

4. The implementation of conventional and modified PINNs is performed in MATLAB. To the best of the authors’ knowledge, this is the first published PINNs code for structural vibrations written in MATLAB, which is expected to benefit a wide scientific audience interested in the application of deep learning in computational science and engineering.

5. Complete executable MATLAB codes of all the examples undertaken have been provided along with their line-by-line explanation so that the interested readers can readily implement these codes.

Four representative problems in structural vibrations, involving ODEs and PDEs and including a multi-DOF system, have been solved. Both forward and inverse formulations have been addressed for each problem. The results of the three examples involving single-DOF systems clearly show that the conventional PINNs is incapable of approximating the response owing to a regularization issue. The modified PINNs approach addresses this issue and captures the solution of the ODE/PDE adequately. For the 2-DOF system, the conventional PINNs performs satisfactorily for both the inference and identification formulations. To solve a multi-DOF system, it is recommended to employ a single neural network with one output per degree of freedom instead of one individual neural network per degree of freedom, since separate networks fail to capture the dependencies of the coupled differential equations (physics).

Making the codes public is a humble and timely attempt to expand the scientific contribution of deep learning in MATLAB, owing to its recently developed rich deep learning library. The research model can follow that of authors who share their Python codes in public repositories such as GitHub. Since the topic is attracting considerable interest, such a collection is expected to be populated quickly with the latest developments and improvements, bringing the best to the research community. The authors envision considerable prospects for this modest study, which applies a recently developed and widely popular method to a new application field and implements it in a new and more user-friendly software.

Our investigation of the proposed PINNs approach on more complex structural dynamic problems, such as beams, plates, and nonlinear oscillators (e.g., cubic stiffness and the Van der Pol oscillator), showed opportunities for improvement. To better capture the forward solution and identify unknown parameters in inverse problems, modifications to the approach proposed in this paper are needed. Based on these observations, the need for further systematic investigation has been identified, which aligns with the recent findings in [21]. Future work should focus on automated weight tuning of fully connected neural networks (e.g., [16]) and explore physics-informed neural ODEs [11] and symplectic geometry [22].

Statements

Data availability statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

Author contributions

TC came up with the idea of the work, carried out the analysis and wrote the manuscript. MF, SA, and HK participated in weekly brainstorming sessions, reviewed the results and manuscript. MF secured funding for the work. All authors contributed to the article and approved the submitted version.

Funding

The authors declare that financial support was received for the research, authorship, and/or publication of this article. TC gratefully acknowledges the support of the University of Surrey through the award of a faculty start-up grant. All authors gratefully acknowledge the support of the Engineering and Physical Sciences Research Council through the award of a Programme Grant “Digital Twins for Improved Dynamic Design,” grant number EP/R006768.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontierspartnerships.org/articles/10.3389/arc.2024.13194/full#supplementary-material

Footnotes

1.^https://uk.mathworks.com/help/deeplearning/ug/initialize-learnable-parameters-for-custom-training-loop.html#mw_f7c2db63-96b5-4a81-813e-ee621c9658ce

2.^Functions passed to ‘dlfeval’ are allowed to contain calls to ‘dlgradient’, which compute gradients by using automatic differentiation.

References

1. Baydin AG, Pearlmutter BA, Radul AA, Siskind JM. Automatic Differentiation in Machine Learning: A Survey. J Machine Learn Res (2017) 18(1):5595–637.

2. Abadi M, Agarwal A, Barham P, Brevdo E, Chen Z, Citro C, et al. TensorFlow: Large-Scale Machine Learning on Heterogeneous Distributed Systems. Software (2016). arXiv preprint: 1603.04467. arxiv.org/abs/1603.04467. Available from: http://tensorflow.org/.

3. Paszke A, Gross S, Massa F, Lerer A, Bradbury J, Chanan G, et al. Pytorch: An Imperative Style, High-Performance Deep Learning Library. In: Advances in Neural Information Processing Systems 32: Annual Conference on Neural Information Processing Systems 2019; December 8–14, 2019; Vancouver, BC. NeurIPS (2019). p. 8024–35.

4. Chollet F. Deep Learning With Python. 1st edn. United States: Manning Publications Co. (2017).

5. Lagaris I, Likas A, Fotiadis D. Artificial Neural Networks for Solving Ordinary and Partial Differential Equations. IEEE Trans Neural Networks (1998) 9(5):987–1000. 10.1109/72.712178

6. Raissi M, Perdikaris P, Karniadakis G. Physics-Informed Neural Networks: A Deep Learning Framework for Solving Forward and Inverse Problems Involving Non-Linear Partial Differential Equations. J Comput Phys (2019) 378:686–707. 10.1016/j.jcp.2018.10.045

7. Karniadakis GE, Kevrekidis IG, Lu L, Perdikaris P, Wang S, Yang L. Physics-Informed Machine Learning. Nat Rev Phys (2021) 3(6):422–40. 10.1038/s42254-021-00314-5

8. Xu Y, Kohtz S, Boakye J, Gardoni P, Wang P. Physics-Informed Machine Learning for Reliability and Systems Safety Applications: State of the Art and Challenges. Reliability Eng Syst Saf (2023) 230:108900. 10.1016/j.ress.2022.108900

9. Li H, Zhang Z, Li T, Si X. A Review on Physics-Informed Data-Driven Remaining Useful Life Prediction: Challenges and Opportunities. Mech Syst Signal Process (2024) 209:111120. 10.1016/j.ymssp.2024.111120

10. Zhang R, Liu Y, Sun H. Physics-Guided Convolutional Neural Network (Phycnn) for Data-Driven Seismic Response Modeling. Eng Structures (2020) 215:110704. 10.1016/j.engstruct.2020.110704

11. Lai Z, Mylonas C, Nagarajaiah S, Chatzi E. Structural Identification With Physics-Informed Neural Ordinary Differential Equations. J Sound Vibration (2021) 508:116196. 10.1016/j.jsv.2021.116196

12. Yucesan YA, Viana FA, Manin L, Mahfoud J. Adjusting a Torsional Vibration Damper Model With Physics-Informed Neural Networks. Mech Syst Signal Process (2021) 154:107552. 10.1016/j.ymssp.2020.107552

13. Hu Y, Guo W, Long Y, Li S, Xu Z. Physics-Informed Deep Neural Networks for Simulating S-Shaped Steel Dampers. Comput and Structures (2022) 267:106798. 10.1016/j.compstruc.2022.106798

14. Deng W, Nguyen KT, Medjaher K, Gogu C, Morio J. Rotor Dynamics Informed Deep Learning for Detection, Identification, and Localization of Shaft Crack and Unbalance Defects. Adv Eng Inform (2023) 58:102128. 10.1016/j.aei.2023.102128

15. Zhang M, Guo T, Zhang G, Liu Z, Xu W. Physics-Informed Deep Learning for Structural Vibration Identification and Its Application on a Benchmark Structure. Philos Trans R Soc A (2024) 382(2264):20220400. 10.1098/rsta.2022.0400

16. Wang S, Teng Y, Perdikaris P. Understanding and Mitigating Gradient Flow Pathologies in Physics-Informed Neural Networks. SIAM J Scientific Comput (2021) 43(5):3055–81. 10.1137/20m1318043

17. He K, Zhang X, Ren S, Sun J. Delving Deep Into Rectifiers: Surpassing Human-Level Performance on Imagenet Classification. arXiv (2015) 1026–34. CoRR abs/1502.01852. 10.1109/ICCV.2015.123

18. Kingma DP, Ba J. Adam: A Method for Stochastic Optimization. In: 3rd International Conference on Learning Representations, ICLR 2015; May 7–9, 2015; San Diego, CA (2015). Conference Track Proceedings.

19. Haghighat E, Bekar AC, Madenci E, Juanes R. Deep Learning for Solution and Inversion of Structural Mechanics and Vibrations (2021). arXiv:2105.09477.

20. Inman D. Engineering Vibrations. 3rd edn. Upper Saddle River, New Jersey: Pearson Education, Inc. (2008).

21. Baty H, Baty L. Solving Differential Equations Using Physics Informed Deep Learning: A Hand-On Tutorial With Benchmark Tests (2023). Available from: https://hal.science/hal-04002928v2,hal-04002928v2 (Accessed April 18, 2023).

22. Zhong Y, Dey B, Chakraborty A. Symplectic Ode-Net: Learning Hamiltonian Dynamics With Control. In: Proc. of the 8th International Conference on Learning Representations (ICLR 2020); April 26–30, 2020; Ethiopia.

Keywords

PINNs, PDE, MATLAB, automatic differentiation, vibrations

Citation

Chatterjee T, Friswell MI, Adhikari S and Khodaparast HH (2024) MATLAB Implementation of Physics Informed Deep Neural Networks for Forward and Inverse Structural Vibration Problems. Aerosp. Res. Commun. 2:13194. doi: 10.3389/arc.2024.13194

Received

26 April 2024

Accepted

25 July 2024

Published

13 August 2024

Volume

2 - 2024

Copyright

© 2024 Chatterjee, Friswell, Adhikari and Khodaparast.

This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Tanmoy Chatterjee, t.chatterjee@surrey.ac.uk

Disclaimer

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.