Abstract

Over recent years, the rise of Generative Artificial Intelligence (Gen AI) has led to the emergence of numerous tools for the creation of visual and textual content. This technological advancement, supported by increasingly robust data management systems and targeted research efforts, has driven the continuous refinement of Machine Learning and Deep Learning models. As a result, Gen AI tools have demonstrated ever-increasing performance, leading to their rapid adoption in various sectors, including the Cultural and Creative Industries (CCI). Here, they are being integrated into value-creation pipelines, potentially impacting both production processes and career prospects for creative professionals. As a consequence, critical questions have emerged about the widespread use of Gen AI tools and the nature of their generative capabilities, often encapsulated under the umbrella term of “computational creativity.” This notion has begun to challenge the traditional conception of creativity as an intrinsic and exclusive capacity of human beings, with implications across all fields in which human creativity is central, such as the design disciplines. In light of the current scenario, the presented research aims to discuss the application of Gen AI tools for image, text, and video generation in fashion design. The analysis draws on the results of a didactic laboratory entitled Artificial A(i)rchive, which involved design practitioners from the Master’s degree course in Design for the Fashion System at Politecnico di Milano. Within this workshop, the adoption of Gen AI was investigated, examining how AI was integrated at various stages of the design process and highlighting both the potential and shortcomings of applying Gen AI to support the activities of fashion designers. 
The article thus aims to contribute to the discussion and identification of collaborative models between fashion designers and AI, while situating the findings within a broader reflection on emerging creative practices and their potential implications.

Introduction

The rise of generative AI and its application in cultural and creative industries

Since McCulloch and Pitts’s theorisation in 1943 (1), Artificial Intelligence (AI) has followed a long path of evolution, reflected in the collective imagination by alternating peaks of attention and troughs of disregard. These summer and winter phases are common to innovation developments and correspond to the rise and fall of expectations that certain technological advancements might directly and dramatically impact people’s daily lives. The development of “GPT-2” by OpenAI in 2019 marked the shift from AI as unexpressed potential, still confined to specialist contexts and only tacitly embedded into user applications such as search engines, to AI as a real “General Purpose Technology” (GPT): that is to say, a technology finally accessible to common users, who simply interact through natural language, and able to perform tasks by apparently producing original content. The start of this new summer of “Generative AI,” as it is currently labelled, accelerated its development through increasing investments and the consequent concurrent releases of ever more advanced and capable AI tools. Together with a widespread “try-on” attitude in the general public, this phenomenon revived the questioning of the potential negative impacts of technology, which has accompanied humanity since the rise of the machine age during the first Industrial Revolution. Gen AI has, in fact, a very peculiar nature, which lies in its multipurpose orientation and its capacity to go beyond the enhancement of strength, efficiency and speed in calculation and prediction that characterised previous technological paradigms. 
The AI models that preceded the generative ones, inspired by the functioning of the brain through so-called “neural-network models,” were already able to replicate, to some extent, the human cognitive capacity for decision-making based on learning and consequent prediction (so-called “machine learning”) by processing large amounts of data (so-called “big data”). These systems, already embedded in our daily lives, from autonomous vacuum cleaners and vehicles to internet search engines, still lacked a key attribute of humans: creativity (Taulli, 2019). Gen AI systems, such as ChatGPT, challenged this assumption by appearing (1) to receive commands by understanding the meaning of natural language and (2) to respond by performing tasks that produce original/new content. These particular attributes immediately revived the debate about their potential impact on job markets and on those creative professions still untouched by automation, up to the resurgence of the obscure apocalyptic fear that machines will ultimately replace humans and decree their extinction (Floridi, 2022).

In 2013, an internationally acknowledged study by two Oxford researchers, Carl B. Frey and Michael A. Osborne, examined the susceptibility of jobs to computerisation, looking at 702 job profiles in the United States. According to their estimates, 47% of total American employment was at risk of computerisation and could be replaced by machines within one or two decades (Frey and Osborne, 2017). These results were substantially confirmed in subsequent studies, but almost all analyses still converged on the conviction that professional positions involving high levels of creativity and manual dexterity were less likely to become obsolete (World Economic Forum, 2016). The rise of Gen AI is now challenging this conviction, a challenge that not only reignites the scientific debate but is also reinforced by the diffusion of Gen AI applications in Cultural and Creative Industries (CCI), from movie production to theatre, from literature to fashion (European Commission, 2022).

As fashion is a field closely connected to cultural trends and social interaction, it has been one of the most experimental towards AI, but with applications whose impacts and benefits often appear unclear. As a matter of fact, in a recent study, 73% of fashion executives said Gen AI will be a priority for their businesses, but just 28% have tried using it in creative processes for design and product development, that is to say, within their core business (The State of Fashion 2024, 2023).

Current trends in fashion make it urgent to better understand which applications can be useful to the industry, what their real implications are, and when and how they can be adopted.

Ultimately, or even prior to that, the question is whether Gen AI is “creative” in the sense we give to this term and, consequently, whether it has the real potential to substitute designers in their tasks.

Can AI be creative? Drawing on the current debate

The debate on the nature of creativity and creative thinking has accompanied the whole evolution of the humanities and cognitive sciences, with different implications and offering various models for understanding human creativity (Sawyer, 2012). The convergence between cognitive science and computer science, since the first theorising of neural network models in the middle of the last century, has also quickly linked many of the concepts originally belonging to psychology and neuroscience to the world of computers. The recent development of Gen AI and its apparent ability to produce creative outputs has revived this intersection, together with the many implications that machines increasingly resembling human creativity seem to have. The key point of dispute appears to be whether computers are creative or not and whether they can substitute humans in creative tasks through these new Gen AI tools (Hutson et al., 2024a). Cognitive scientist Margaret Boden, among the most authoritative voices on the topic, has deeply investigated this polarisation, bringing out a secular point of view which stays away from the goal of demonstrating that computers are or are not creative and instead analyses the attributes that make the results of “computational creativity” resemble those of human creativity. Additionally, she uses these attributes and her deep knowledge of the advancement of computational creativity to better explain the human creative process, still one of the fuzziest frontiers of neuroscience (Boden, 2004). As a matter of fact, the cross-wiring of languages between computer science and brain studies has always instilled ambiguity in the understanding of the real nature and functioning of automated computational processes and human cognitive processes. In fact, even if the outputs of the two can be or appear similar, this does not mean that the processes producing those outputs are the same (Floridi and Nobre, 2024). 
This ambiguity is also reflected in the current debate on the supposed creativity of Gen AI, a label which suggests that its outputs are the result of a creative process comparable to the human one.

As introduced earlier, Gen AI systems appear, in fact, to be creative as (1) they receive commands by understanding the meaning of natural language and (2) they respond by producing original/new content. But as philosopher Luciano Floridi clearly pointed out, there is a substantial difference between the process followed by Gen AI and that of human creativity. The human mind encodes its concepts through natural language, which is built by associating “meanings” with words, i.e., semantics, and then combining them into language through codified rules, i.e., syntax. For humans, it is the semantic system, the meaning, that drives the syntactic construction of language. Gen AI models, instead, work only through a syntactic system: they are based on a very advanced statistical and predictive model that has been “pre-trained” through machine learning on literally billions of tokens (Floridi, 2023). These enormous datasets, describing human knowledge through millions of sources, enable them to associate words (in the case of Large Language Models, LLMs, as for ChatGPT) or pixels of images (in the case of Diffusion Models, DMs) based on statistical predictions. This means, with reference to assumption (1), that they do not really understand the “meaning” of natural language or of images, but only associate and combine words or pixels by probability. This also implies that the output produced through this process is based on pieces of knowledge that already exist and is somehow predictable as a combination of what has already been created by humankind. That is to say, assumption (2) is also questionable: even if the outputs produced by Gen AI may introduce original recombinations and new associations, it remains doubtful that this sophisticated statistical process can ever produce something radically new. 
Attached to these critical reflections, there are also several concerns related to the limited understanding of the real functioning of Gen AI algorithms and processes, even among the very scientists who developed them, raising the demand for transparency and explainability (Samek et al., 2019). Therefore, as Floridi argues, we currently do not have semantically competent and truly intelligent machines that understand things, care about them, and produce creative results that are meaningful to people and aimed at improving the quality of their lives. We only have sophisticated statistical and purely syntactic technologies that can produce apparently original and credible outputs by circumventing problems of meaning, relevance, comprehension, truth and impact (Floridi, 2023).
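The purely statistical association of words described above can be made concrete with a deliberately tiny sketch. It is not how production LLMs are implemented (they rely on neural networks trained on vastly larger corpora); it is a toy bigram counter, with a hypothetical three-sentence corpus and hypothetical function names, intended only to show how text can be continued by probability alone, with no representation of meaning.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus standing in for the billions of tokens used in pre-training.
CORPUS = "the jacket has stripes . the jacket has buttons . the coat has stripes ."


def train_bigram_counts(text):
    """Count how often each token is followed by each other token."""
    tokens = text.split()
    counts = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts


def next_token_probabilities(counts, token):
    """Convert the follow-up counts of `token` into a probability distribution."""
    followers = counts[token]
    total = sum(followers.values())
    return {word: n / total for word, n in followers.items()}


def most_likely_continuation(counts, start, length):
    """Extend `start` by repeatedly choosing the most frequent next token."""
    output = [start]
    for _ in range(length):
        followers = counts[output[-1]]
        if not followers:  # no observed continuation: stop early
            break
        output.append(followers.most_common(1)[0][0])
    return output


counts = train_bigram_counts(CORPUS)
probabilities = next_token_probabilities(counts, "has")
continuation = most_likely_continuation(counts, "the", 3)
print(probabilities)
print(" ".join(continuation))
```

Every continuation this sketch produces is a recombination of sequences already present in the corpus, which mirrors, in miniature, the doubt raised about assumption (2).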

Consequently, the perception of AI as inherently creative is purely illusory; its capabilities merely simulate creative behaviours. What effectively transforms AI-generated results into artistic products is the strategy adopted to exploit this technology as a tool for achieving creativity (Marburger, 2024). Indeed, almost all scholars and practitioners, even the most enthusiastic about Gen AI, converge in asserting the need to keep humans in the loop and to regard these applications as new collaborative tools.

To begin with, Carl B. Frey and Michael Osborne, in 2024, after the explosion of Gen AI, felt the need for a reappraisal of their study on automation and its impact on the future of jobs (Frey and Osborne, 2023). Their conclusion about the wave of Gen AI is that it will lead neither to automation nor to new industries but to the transformation of existing content-creating jobs. They argue that it cannot be considered an automation technology, as it requires human contribution to perform its tasks in two key respects. On the one hand, humans are needed for “prompting” Gen AI towards meaningful results, suggesting and steering the pathways of combination and association produced by statistics, and then selecting and refining the most promising results by directing them towards meaningful goals and impacts. On the other hand, humans bring in the general and transversal knowledge of the context that can suggest unexpected associations, linking seemingly unrelated ideas and domains, from which something truly new can derive (Mollick, 2024). This matters also because one of the fears about unsupervised Gen AI is that, if nothing fresh and radically unknown is ever introduced into the training system, it may end up progressively degrading its predictive potential, exhausting the quality of the information and data that can feed it.

In conclusion, as Gen AI’s role has been identified as that of a collaborator alongside humans rather than a substitute for their abilities, it is increasingly important to focus debate and research on how to make Gen AI applications useful for creative jobs. This includes defining their perimeter and limitations, exploring the grey area of contested ownership resulting from a human-AI collaborative process (Vishnu, 2024), and implementing collaborative paradigms in which new professionals are trained to direct Gen AI towards meaningful outputs and positive impacts.

Generative AI tools in design processes: designer-AI collaboration

While the debate on the creativity of Gen AI is being nurtured and increasingly clarified, the CCI, which are the most exposed to societal trends and to topics in the spotlight of public opinion, are rushing to test ever-new applications. Among them, the fashion industry, with its future-oriented attitude and its inherently media-driven nature, was among the earliest adopters. Already in 2020, the first experimental approaches to AI appeared in the business: in Paris, the fashion house Acne Studios explored the possibilities of using it in its Men’s Fall/Winter 2020 collection in collaboration with artist Robbie Barrat. The quick spread of interest in Gen AI was then increasingly reflected in fashion, reaching a peak in 2023. That year, during London Fashion Week, Moncler, together with Maison Meta, a pioneer in the use of Gen AI, and creative agency WeSayHi, proposed AI-generated visuals showcasing its latest collaborations with prestigious designers and brands such as Adidas Originals, Pharrell Williams and Alicia Keys. In January 2023, Valentino, an icon among Italian luxury prêt-à-porter brands, also used Gen AI to create an advertising campaign dedicated to the launch of its Essential line. The same year, Cyril Foiret, founder of Maison Meta, launched the first AI Fashion Week at Spring Studio in Brooklyn, New York, which received more than 350 submissions for participation in the competition. While this hype already seems to be diminishing, the examples show the current confused approach to this technology, which seems, in most cases, to be used more as a media topic to attract audiences than as a real new tool to be applied in the core business (Rizzi and Casciani, 2024).

Part of this unclear scenario on the potential and impacts of Gen AI in CCIs, and specifically in fashion, resides in the inner nature of these tools. In fact, their developers are still more focused on exploring the dimension of interaction with users and improving the quality of outputs by refining the algorithmic models than on refining and specialising their applications. As a matter of fact, experts in innovation theories are converging on considering technologies such as ChatGPT as GPTs in a double sense: not only “Generative Pre-trained Transformers” but also “General Purpose Technologies,” that is, technologies with the potential to transform several if not all spheres of human activity (Brynjolfsson et al., 2023). This general and open nature is, on the one hand, the reason why they are broadly diffused and particularly accessible even to common users; on the other hand, it is the reason why they are still underdeveloped with respect to the useful applications they could find in specific domains. This is a typical condition of GPTs at the moment of their introduction throughout the history of science and technology. Therefore, as the acceptance of Gen AI in specific domains such as fashion proceeds and a process of professionalised adoption matures, its applications are now starting to show their real potential and impacts.

In light of this fluid scenario, a possible and desirable paradigm is emerging in CCI, which sees Gen AI tools being embedded into creative processes within a collaborative space, augmenting human capabilities in several tasks and phases. This perspective is aligned with many theorisations of the nature of creativity and of the innovation it produces, which for a few decades has been acknowledged to be the result of a “collective construction” (Rosenberg, 1999). In particular, the acceptance and adoption of a certain innovation is deeply connected with the “domain communities” that start to recognise its benefits. Moreover, an innovation evolves to produce ever more positive impacts the more domain expert-users access and adopt it (Csikszentmihalyi, 1996). The way Gen AI seems to be currently integrated into CCI is consistent with this perspective, and it has the potential to become one of the agencies of the expert community that participate in design processes (Gruner and Csikszentmihalyi, 2019).

In conclusion, within the general concept of the social construction of creativity, a new “designer-AI” collaboration space is emerging, where innovation is the result of co-creation between individuals and where, now, the collaboration between creative professionals and Gen AI applications should be included (Atkinson and Barker, 2023). In light of this premise, it is urgent to better define the perimeter and modalities of this co-creation process and assess AI tools clearly, evaluating their potential and inherent biases.

Assessing generative AI tools for fashion design

Gen AI tools have gained wide application in creative work (Croitoru et al., 2023) due to their increasingly intuitive interfaces, which foster collaboration between humans and AI (Rapp et al., 2025), and their high technical performance in producing high-quality visuals that vary in style and composition (Oppenlaender, 2022).

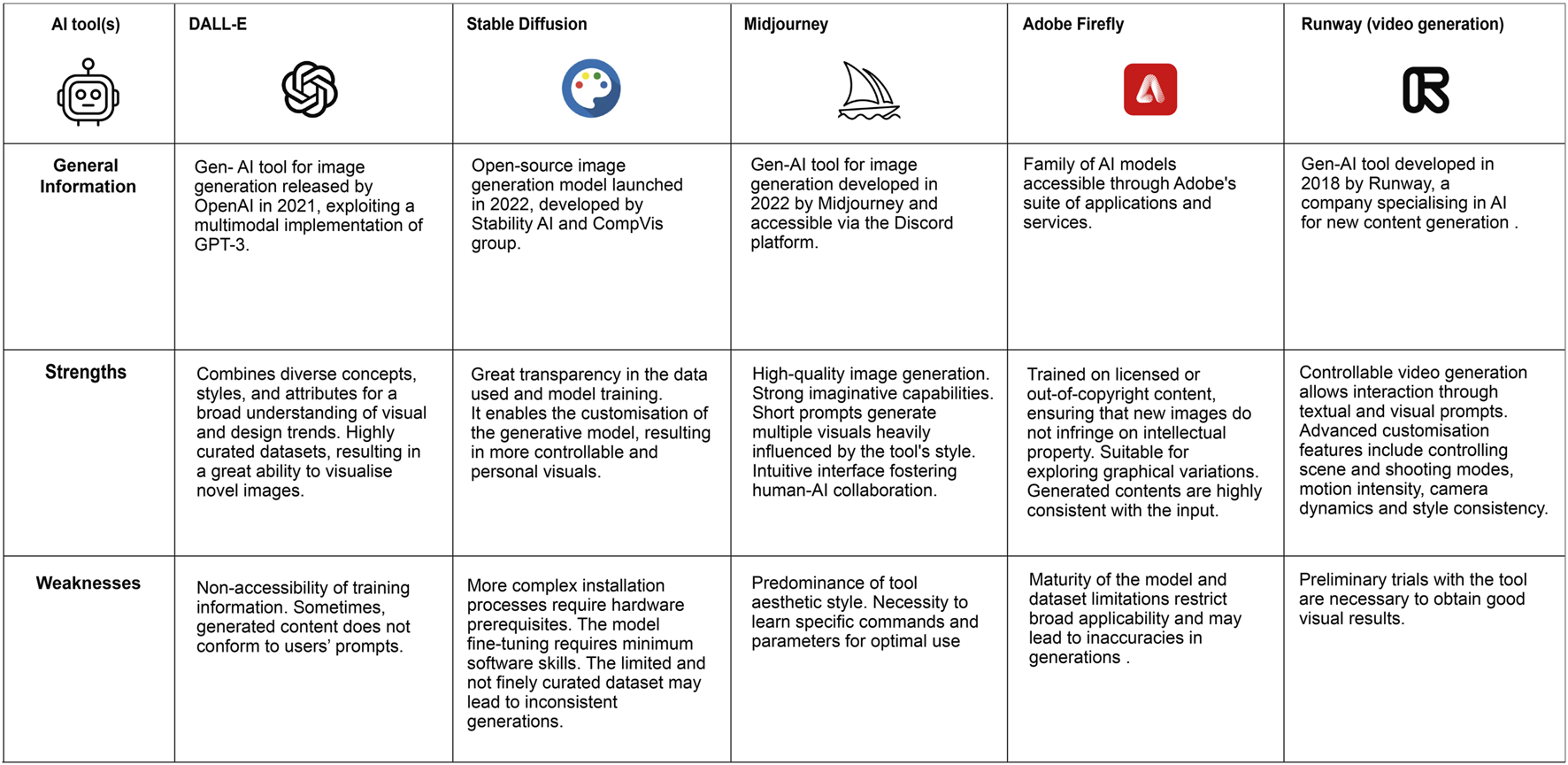

Within the fashion industry, there are numerous stages in the value chain where Gen AI can be integrated to bring value to the process. With a specific focus on product development, AiDLab, Adobe Firefly, Cala, Google AI, Midjourney, OpenAI, Raspberry.ai, Runway, Stable Diffusion, Threekit and Yoona.ai have emerged as some of the most widely used tools in fashion companies (The State of Fashion 2024, 2023). Among these, special attention is given to Gen AI tools for image and video creation, e.g., Midjourney and Runway, which can assist designers in developing moodboards, design concepts, visual renderings and virtual fashion shows. An overview of their features is presented in Figure 1, highlighting some of the strengths and weaknesses of their models (Fernberg et al., 2023; Jie et al., 2023; Turchi et al., 2023; Bengesi et al., 2024; Hutson et al., 2024b; Ranscombe et al., 2024; Known limitations in Firefly, 2024; Runway, 2025).

FIGURE 1

Overview of generative AI tools for image and video creation.

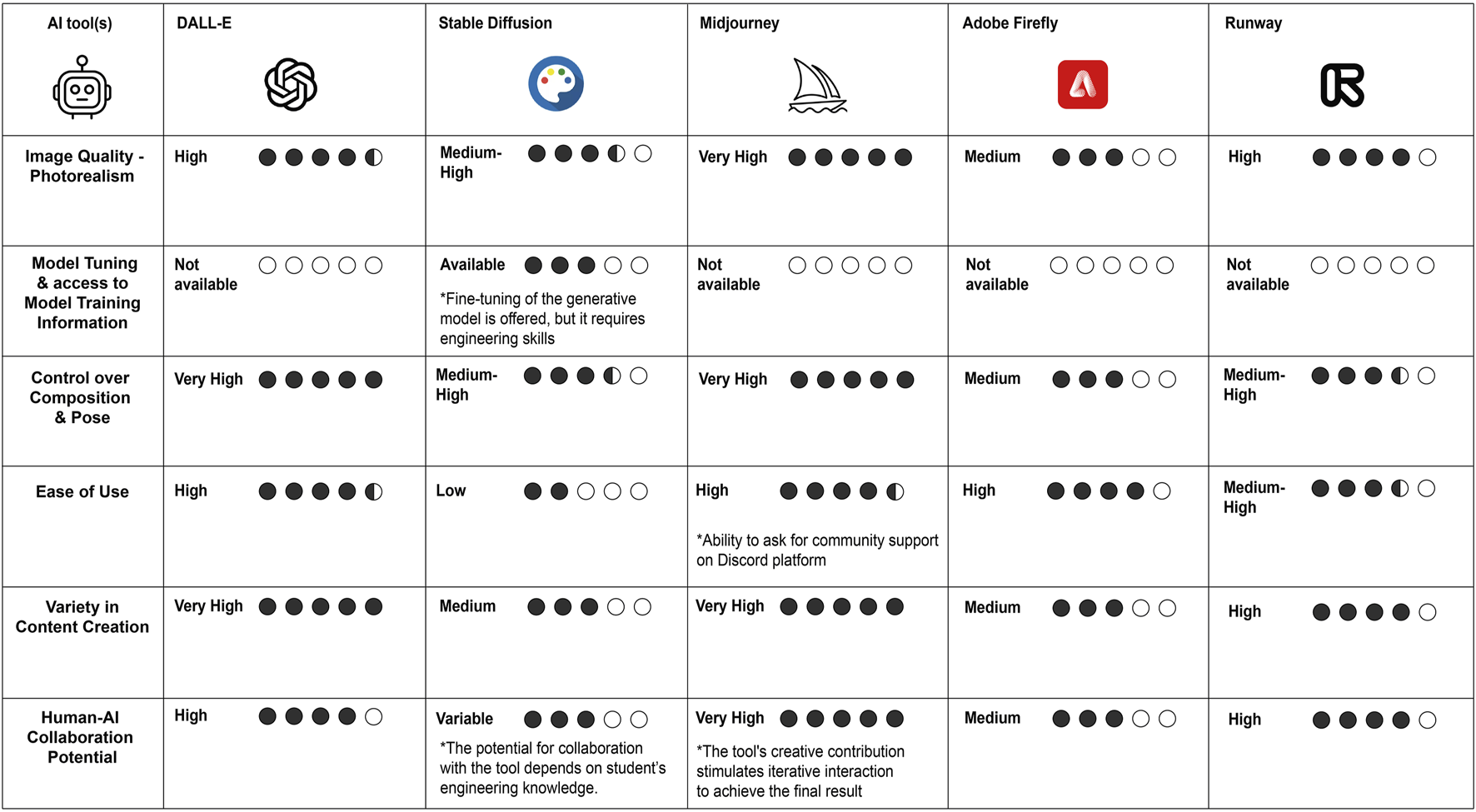

For the purpose of the didactic laboratory, a preliminary evaluation was conducted to systematically identify Gen AI tools aligned with the project objectives. This process examined how these tools could potentially support fashion design ideation and product development phases, based on parameters of “Image Quality-Photorealism,” “Model Tuning and Access to Model Training Information,” “Control over Composition and Pose,” “Ease of Use, Variety in Content Creation,” and “Human-AI Collaboration Potential.” The selection of these criteria aimed to evaluate the tools by considering their technical performance in output generation, the degree of control in adapting their use to project requirements, and general technical information on the AI model, framing their overall ability to stimulate critical thinking and creativity in the design process. This evaluation was informed by a literature review assessing their performance (Fernberg et al., 2023; Jie et al., 2023; Turchi et al., 2023; Bengesi et al., 2024; Hutson et al., 2024b; Ranscombe et al., 2024; Known limitations in Firefly, 2024; Runway, 2025), and conducted on a selection of tools available on the market: DALL-E, Stable Diffusion, Midjourney, Adobe Firefly, and Runway. Through the analysis of their characteristics, Midjourney and Runway were identified as the most compatible with the project objectives. The complete comparison and evaluation of the Gen-AI tools are provided in Figure 2.

FIGURE 2

Comparison and evaluation of Generative AI Tools for image and video creation.

In addition to the previously listed tools, ChatGPT 3.5 was included in the project framework. The tool, built on large language models, is characterised by its versatility and broad knowledge base.1 The expertise from various domains, brought together in a single platform and accessible through conversational interactions, allows designers to gather and refine information iteratively, supporting the redefinition and flexible adaptation of design problems in alignment with the fluid nature of the design process (Hu et al., 2023).

Designer-AI collaboration experience: the artificial A(i)rchive laboratory

Methodological overview

The didactic laboratory Artificial A(i)rchive was developed as part of the MSc curricular programme to engage students in exploring the relationship between natural and computational creativity within the fashion design process. The laboratory took place from September to December 2023 and involved 76 design practitioners in the final year of the MSc in Design for the Fashion System at Politecnico di Milano. It was conducted as part of the regular curricular teaching activities within a course officially included in the MSc programme and structured according to the syllabus approved by the Programme Committee. The data analysed in this study were not gathered through additional research activities, such as interviews, participant observation, or surveys, but derived entirely from students’ regular coursework and deliverables. The researchers involved in the analysis and theoretical modelling of the results served as lecturers and teaching assistants within the laboratory and, in this role, assumed responsibility as data controllers for the educational materials, overseeing their collection, anonymisation, and interpretation in line with institutional data protection protocols throughout both the didactic phase and the subsequent research activity. Only after the course had concluded were the materials generated as outputs for the final student assessment anonymised and examined. The educational experience provided a framework for observing how generative AI tools had been integrated into students’ creative workflows and for identifying broader patterns and reflections concerning their potential impact on fashion design-specific knowledge (Thoring et al., 2023).

Materials and process overview

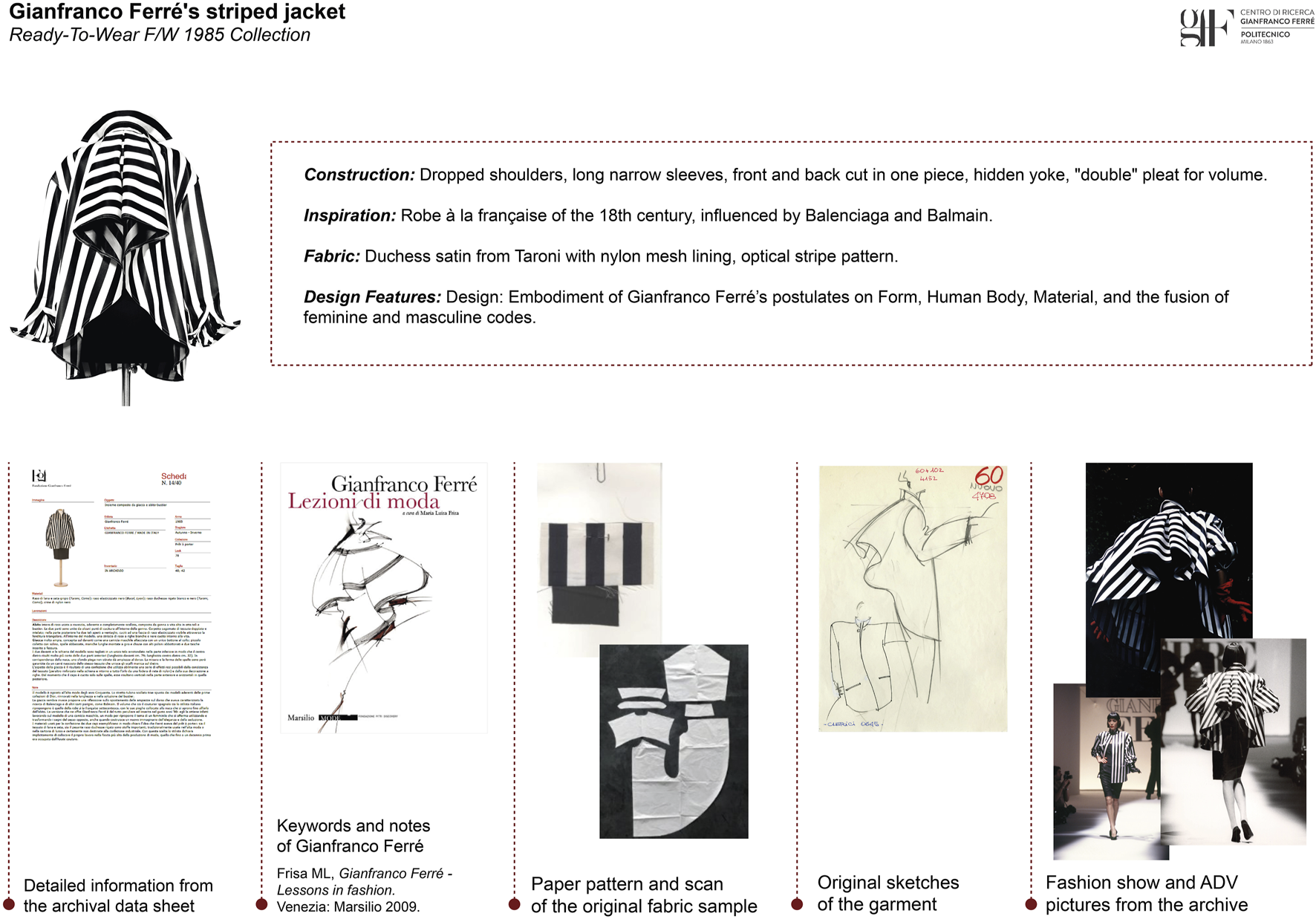

In order to explore designer-AI collaborative dynamics, students were organised into 10 teams and given the same brief of designing a capsule collection consisting of three outfits: a coat, a dress and a piece of choice, with the optional addition of accessories and jewellery to complete the look. Moreover, they were given the same inspirational brief: reinterpreting an iconic garment - the Striped Jacket (F/W – 1985) - belonging to the historical Archive of the Gianfranco Ferré Research Center.2 As initial inspiration, as well as inputs for use in generative AI tools, students were provided with a complete set of archival material and documentation related to the garment: photographs, reproductions of original sketches, paper patterns, technical sheets, material samples, and visual content from related fashion shows. Additionally, teams received keywords and texts drawn from Gianfranco Ferré’s original collection notes, which were used as text prompts during the design process. An overview of the material provided is shown in Figure 3.

FIGURE 3

Overview of archival material provided to students for the project.

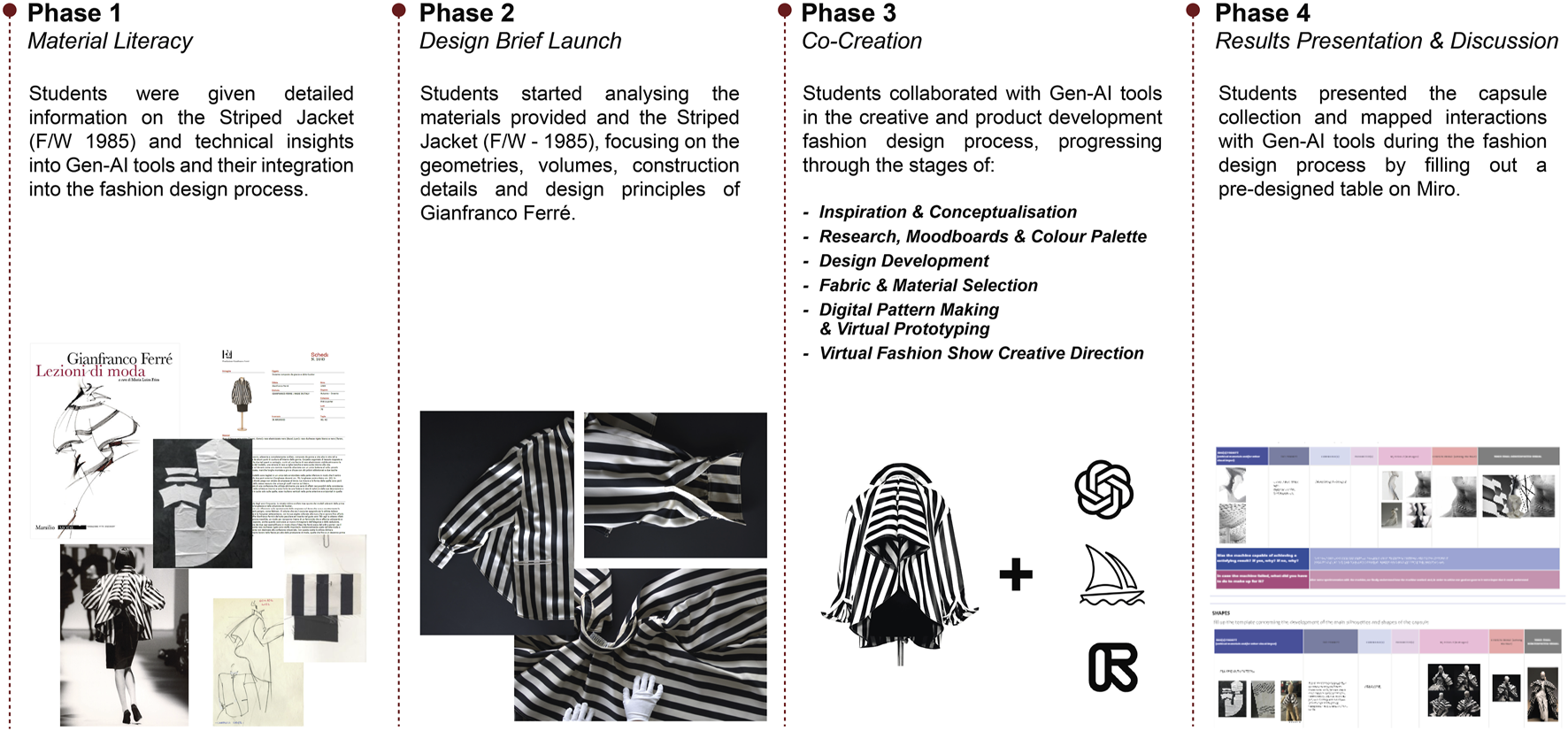

The decision to provide all teams with identical creative briefs and inspirational materials was made in order to better evaluate the dynamics of interaction between designers and AI tools. This allowed for a more consistent comparison of the students’ creative autonomy and of the impact of combining structured, codified inputs with their own original prompts, both visual and textual. The goal was to identify opportunities to enhance designers’ skills, as well as to surface emerging issues related to this new design synergy. The project proceeded through four main phases: “Material Literacy,” “Design Brief Launch,” “Co-Creation,” and “Presentation and Discussion of Results,” illustrated in Figure 4.

FIGURE 4

Overview of project phases.

Mapping the application of generative AI tools

This section describes the previously introduced Phase 3, “Co-Creation,” and Phase 4, “Presentation and Discussion of Results” (Figure 4), focusing on the co-creation path between designers and Gen AI for realising the capsule collection inspired by Gianfranco Ferré’s designs. The collaborative dynamic between the actors was circumscribed within the creative and product development phases of fashion design, addressing the creative briefing to specify inspirations, themes, shapes and details of the collection and then proceeding with their subsequent translation into technical drawings and the selection of fabrics and components (Bertola et al., 2018). This was followed by digital pattern-making of the garment, its virtual prototyping and the design of virtual runway settings. In doing so, the students followed a partial fashion product development process, which cut out the initial “Line Planning and Research” phase, based on market segmentation, trend and consumer behaviour analyses, as well as the “Line Presentation and Marketing” and “Production Planning” phases, intended for marketing strategies and actual production of the collection (Senanayake, 2015).

Concerning the “Co-Creation” phase, the collaboration between designers and Gen AI followed a division of the design process into sequential steps. In the first stage, “Inspiration & Conceptualisation,” students were asked to define their concept for reinterpreting Gianfranco Ferré's Striped Jacket (F/W – 1985). The “Research, Moodboards & Color Palette” step was then approached, during which the identified collection concept was visually translated by creating moodboards containing first impressions of the shapes, materials, colours, geometries and further insights on garments design. This process led to the “Design Development,” in which the creative brief was translated into visual guidelines, made explicit by sketches and flat drawings. In the “Fabric and Material Selection,” the collection reached a further level of specification and technical comprehensiveness. Subsequently, in the “Digital Pattern Making & Virtual Prototyping” phase, the visualisations of the capsule collection were first translated into 2D technical drawings and paper patterns and then fitted to virtual avatars by verifying the three-dimensionality of the garments on the CLO3D software. This transition from two-dimensional visualisation to studying garment shapes, geometries and construction details in volumetric form was a fundamental step in the experimentation. The aim was to assess the Gen AI tool’s understanding of garment construction principles and verify whether AI-generated images could be rationalised and translated into feasible designs. The final phase, “Virtual Fashion Show Creative Direction,” had a twofold purpose. On the one hand, it aimed to develop a runway-setting proposal consistent with the original concept and inspiration of the Gianfranco Ferré Archive. On the other hand, it provided fashion design students with an opportunity to experiment with the Runway tool for video generation.

Following these steps, students delved into the fashion design process, drawing inspiration from AI’s contributions to define the “Design Concepts” (Senanayake, 2015), which were subsequently developed into actual designs, visualised virtually and verified for wearability.

In the “Presentation and Discussion of Results” phase, students were asked to share significant information on how Gen AI tools had been employed during the collaboration. This information was noted by each group throughout the process in a Miro chart, consisting of a series of columns relating to specific features of the development of the fashion capsule collection: “Thematic Inspiration,” “Colors,” “Shapes,” “Graphics,” “Embroideries/Embellishments,” “Final Treatments,” and “Virtual Runway Settings.” For each of the listed items, students were asked to report information in several categories. The first concerned “Image Prompts,” i.e., images provided by students to feed the AI tools during interaction; these could be archival, if collected directly from the Gianfranco Ferré Archive; self-produced, in the case of drawings and images produced by students; third-party, if retrieved from external sources; or AI-generated, if derived from previous interactions. The second category concerned “Textual Prompts,” i.e., texts used to interact with Gen AI tools, which could likewise come directly from archival materials, be self-produced, be taken from third parties or originate from other AI tools. The third category concerned “Commands,” which are used to generate new content and modify Gen AI tool settings. The fourth focused on “Parameters,” i.e., functions to intervene in specific aspects of image generation on Midjourney. The last three categories recorded the “Results” of the generation, the “Selected Result” and its “Final Version,” which could include further interventions and adjustments by the designer. In addition to this more technical information, the students were also requested to report whether a satisfactory result had emerged from the interaction and, if so, how it had been achieved or, if not, the reasons for rejecting the results obtained. 
The collected data were used to reconstruct the development process of each group; the subsequent mapping led to the identification of different paradigms of designer-Gen AI collaboration applicable in the design process.
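The reporting scheme described above can be sketched as a small data structure. This is only an illustrative reading of the Miro chart, not the template actually used in the laboratory: the class and field names (`ChartEntry`, `Prompt`, `Source`, `rationale`) are assumptions, and the example entry is entirely hypothetical.

```python
from collections import Counter
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional

# Chart columns as named in the text.
FEATURES = (
    "Thematic Inspiration", "Colors", "Shapes", "Graphics",
    "Embroideries/Embellishments", "Final Treatments", "Virtual Runway Settings",
)


class Source(Enum):
    """Provenance of a prompt, following the four origins listed in the text."""
    ARCHIVAL = "archival"
    SELF_PRODUCED = "self-produced"
    THIRD_PARTY = "third-party"
    AI_GENERATED = "AI-generated"


@dataclass
class Prompt:
    content: str   # image file name or prompt text (hypothetical representation)
    source: Source


@dataclass
class ChartEntry:
    feature: str
    image_prompts: List[Prompt] = field(default_factory=list)
    textual_prompts: List[Prompt] = field(default_factory=list)
    commands: List[str] = field(default_factory=list)
    parameters: List[str] = field(default_factory=list)
    results: List[str] = field(default_factory=list)
    selected_result: Optional[str] = None
    final_version: Optional[str] = None
    satisfactory: Optional[bool] = None
    rationale: str = ""  # how the result was achieved, or why it was rejected

    def __post_init__(self):
        # Reject entries that do not belong to one of the chart columns.
        if self.feature not in FEATURES:
            raise ValueError(f"Unknown chart column: {self.feature!r}")


def prompt_source_mix(entries):
    """Count image and textual prompts by provenance across chart entries."""
    mix = Counter()
    for entry in entries:
        for prompt in entry.image_prompts + entry.textual_prompts:
            mix[prompt.source] += 1
    return mix


# Entirely hypothetical entry, for illustration only.
example = ChartEntry(
    feature="Shapes",
    image_prompts=[Prompt("striped_jacket_photo.jpg", Source.ARCHIVAL)],
    textual_prompts=[Prompt("oversized striped coat, sharp shoulders", Source.SELF_PRODUCED)],
    commands=["/imagine"],
    parameters=["--ar 3:4"],
    results=["grid_01.png"],
    selected_result="grid_01.png",
    satisfactory=True,
    rationale="Silhouette consistent with the concept.",
)
print(prompt_source_mix([example]))
```

Recording provenance per prompt, rather than per entry, is one possible design choice; it allows a later mapping to distinguish how much each group relied on archival, self-produced, third-party or AI-generated inputs.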

Outcomes overview of the fashion design students’ collaboration with AI

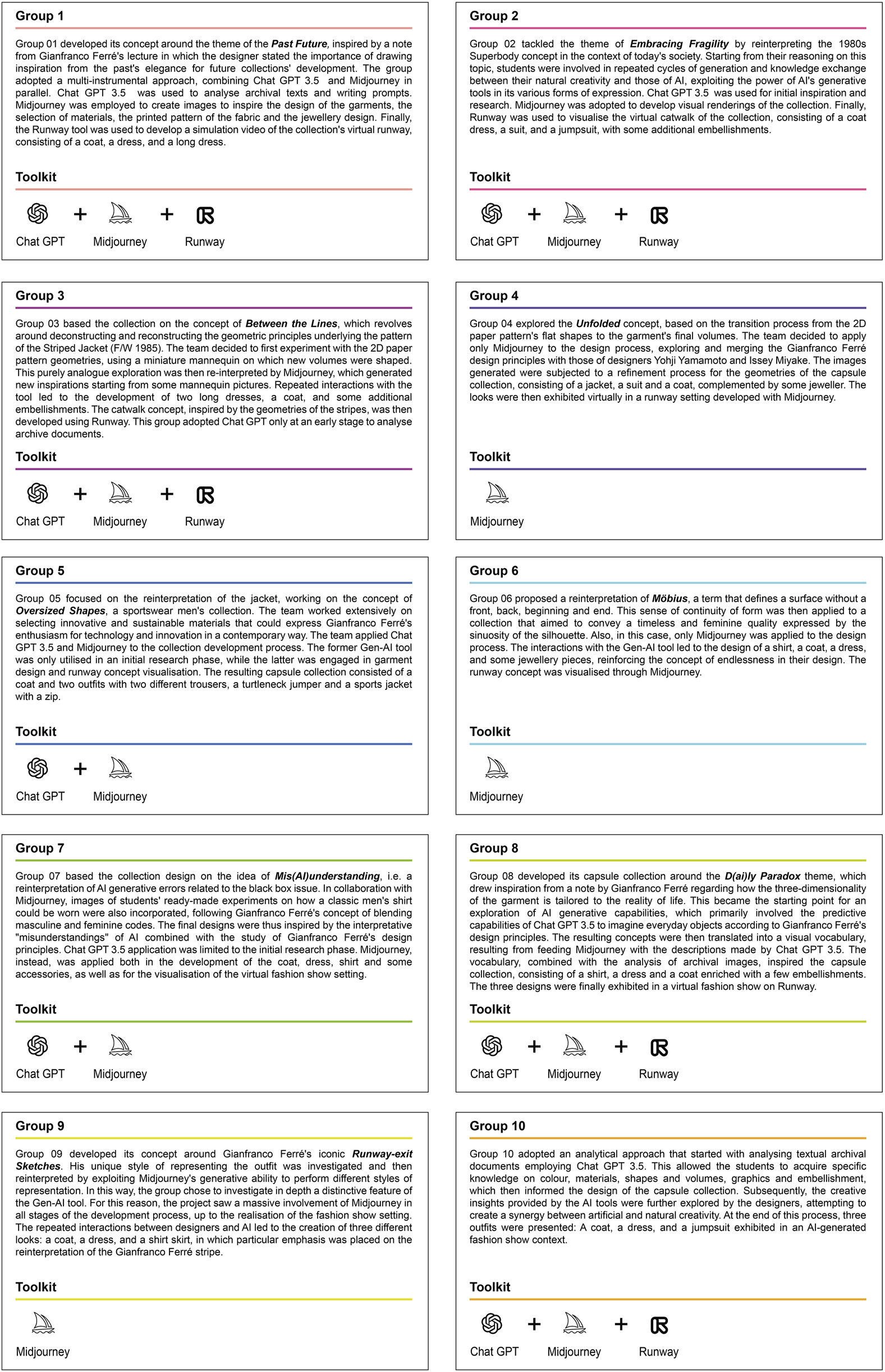

This section introduces the ten output projects derived from the Artificial A(i)rchive laboratory. As mentioned in the project description, the students were asked to develop a capsule collection inspired by Gianfranco Ferré’s Striped Jacket (F/W 1985), consisting of a coat, a dress and a garment of the team’s choice. Figure 5 provides a schematic representation of each project, outlining which Gen AI tools the teams integrated into the design process.

FIGURE 5

Project descriptions highlighting the application of Generative AI tools in the fashion design process.

Discussion and results

Creation of the design process analysis matrices

This section discusses the designer-AI collaborative dynamics observed throughout the laboratory. An initial analysis of the group projects, focusing on the integration of Gen AI tools in the design process, revealed that all ten groups collaborated with at least one Gen AI tool to develop the collection. Groups 04, 06 and 09 incorporated only Midjourney into their creative process. Groups 05 and 07 opted for dual use of Gen AI, combining Chat GPT 3.5 with Midjourney. Groups 01, 02, 03, 08 and 10 chose to exploit all three tools, also exploring the capabilities of Runway in designing the virtual fashion show. Despite the different approaches displayed by the groups in incorporating Gen AI into the design process, none of the ten projects reported the application of an AI tool for “Digital Pattern Making & Virtual Prototyping.”
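The distribution of tools across groups reported above can be tallied with a short sketch. The group-to-tool mapping is taken from the text; the variable and function names are illustrative assumptions.

```python
from collections import Counter

ALL_TOOLS = {"Midjourney", "Chat GPT 3.5", "Runway"}

# Tool adoption per group, as reported in the text.
TOOLS_BY_GROUP = {
    "04": {"Midjourney"},
    "06": {"Midjourney"},
    "09": {"Midjourney"},
    "05": {"Midjourney", "Chat GPT 3.5"},
    "07": {"Midjourney", "Chat GPT 3.5"},
    "01": set(ALL_TOOLS),
    "02": set(ALL_TOOLS),
    "03": set(ALL_TOOLS),
    "08": set(ALL_TOOLS),
    "10": set(ALL_TOOLS),
}

# Number of groups that adopted each tool.
usage = Counter(tool for tools in TOOLS_BY_GROUP.values() for tool in tools)


def groups_using(tool: str) -> list:
    """Return the sorted identifiers of the groups that adopted `tool`."""
    return sorted(g for g, tools in TOOLS_BY_GROUP.items() if tool in tools)


print(usage["Midjourney"], usage["Chat GPT 3.5"], usage["Runway"])  # 10 7 5
print(groups_using("Runway"))  # ['01', '02', '03', '08', '10']
```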

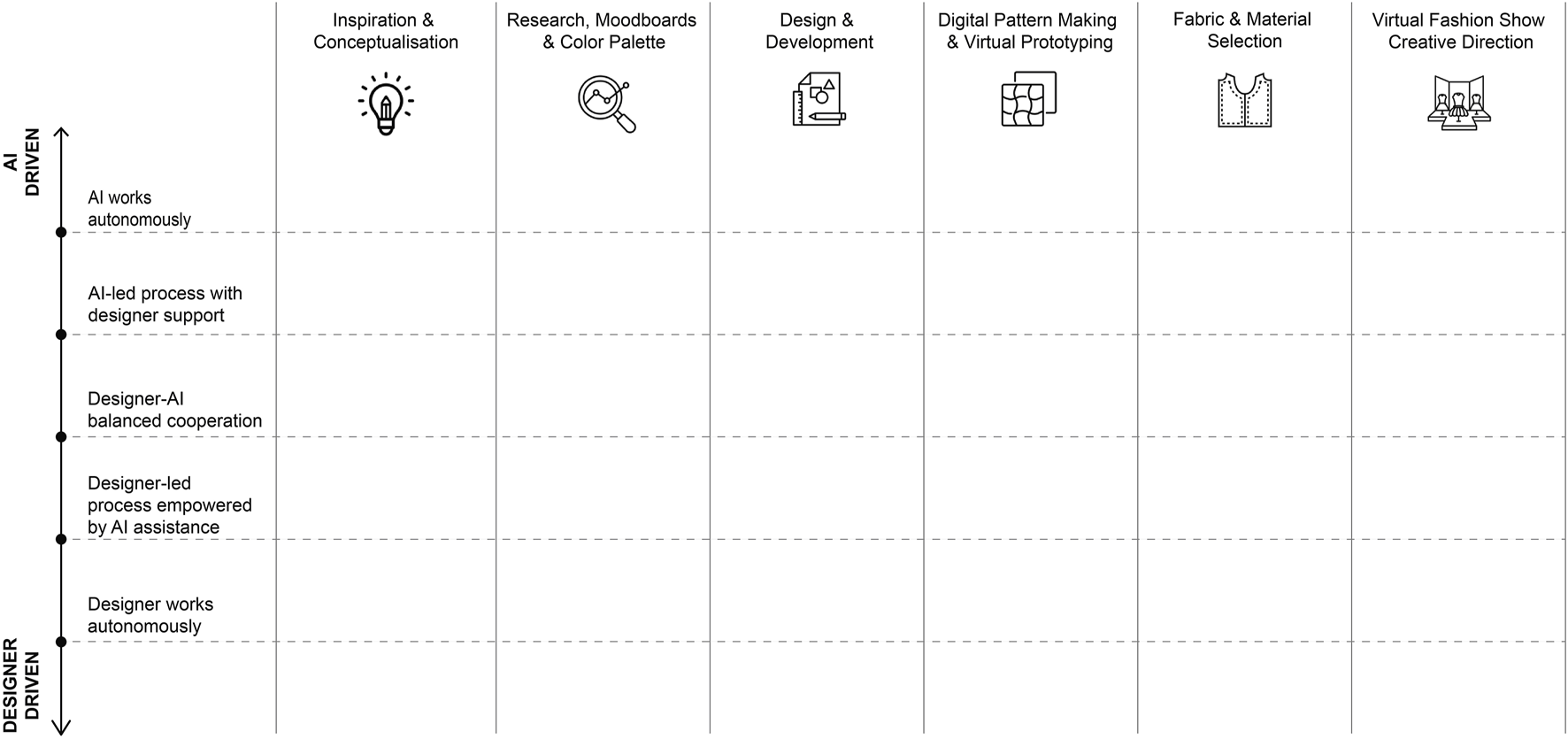

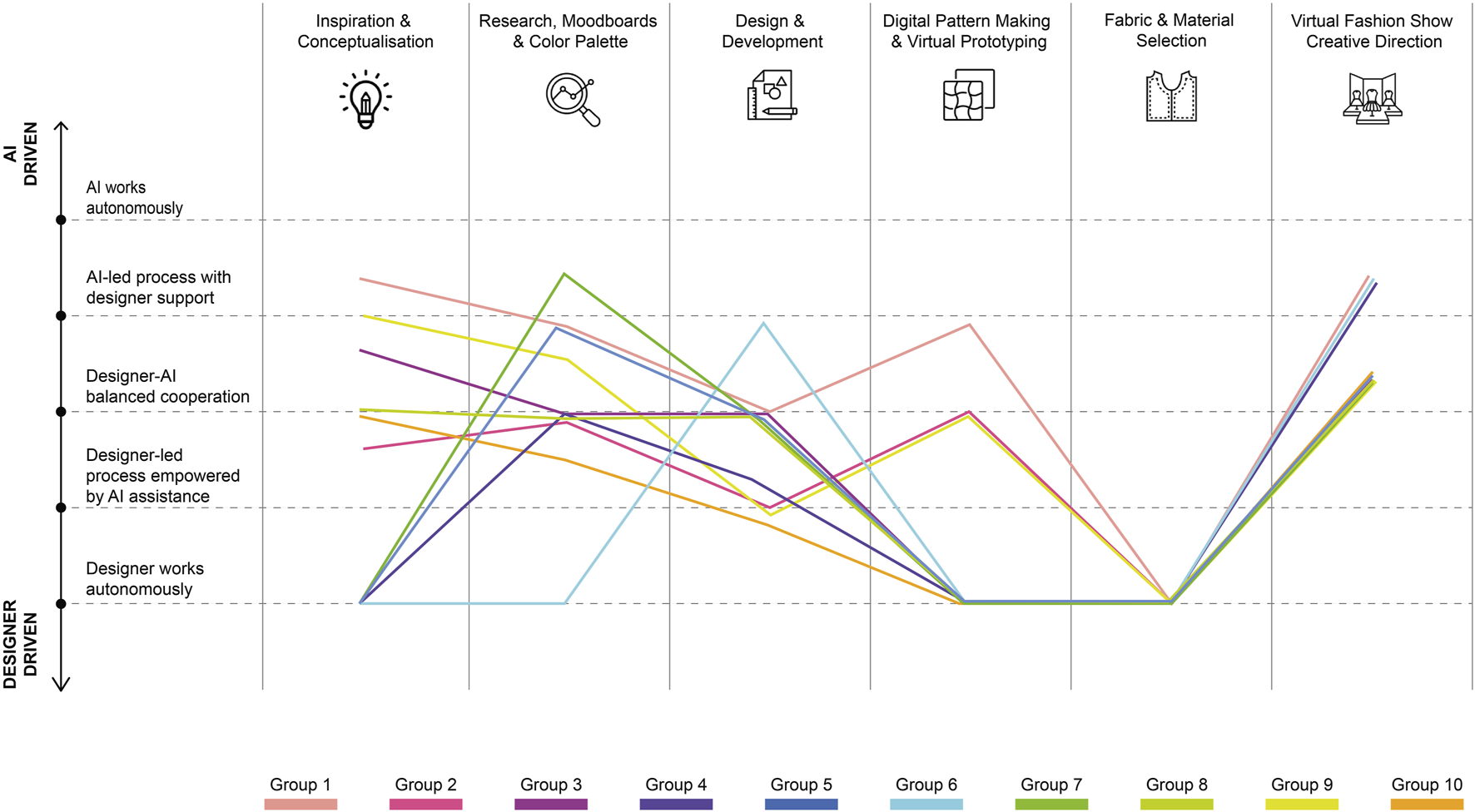

To deepen the understanding of the collaborative dynamics between fashion design practitioners and AI, we constructed two matrices (Figures 6, 7) evaluating the results of the ten groups, referring to the analyses proposed by other scholars (Kantosalo and Takala, 2020; Kurosu, 2021; De Peuter et al., 2023; Shi et al., 2023; Zhang et al., 2023) on human-AI co-creation. The first matrix aimed to investigate the interplay between AI and designer participation in the stages of the design process, while the second aimed to define the nature of AI and designer action, respectively.

FIGURE 6

Analysis matrix 1 - evaluation of generative AI and designer contributions within the fashion design process.

FIGURE 7

Analysis matrix 2 - descriptions of generative AI tools, AI contribution, and designers’ approach in the fashion design process.

To assess the nature of the co-creation process, we decided to embrace the perspective on computational creativity proposed by Wu et al. (2021): “The ability for humans and AI to co-live and co-create by playing to each other’s strengths to achieve more. AI is a complement to human intelligence, and it consolidates wisdom from all achievements of humanity, making collaboration across time and space possible” (Kurosu, 2021, p. 172). This vision emphasises the role of Gen AI tools as a supplement to natural human creativity, which aligns with the scenario of the “Artificial A(i)rchive” laboratory, where large-scale generative model-based tools were studied to test their capacity to support the designer’s efforts in defining the creative brief and developing the collection. In this regard, Wu et al. (2021) introduced the “Human-AI Co-Creation Model” (Kurosu, 2021), a framework in which human-AI collaboration is defined as a circular process marked by six main AI capabilities in assisting the designer: “Perceive,” “Think,” “Express,” “Collaborate,” “Build” and “Test.” Similarly, our approach examined how AI serves as a tool to augment human perception and creativity, facilitating improved opportunities for content communication, idea visualisation, and discussion. This aligns with five of the capabilities outlined by Wu et al. (2021) and Kurosu (2021). However, we diverged from the “Collaborate” perspective, which treats collaboration as the specific phase in which the primary exchange of information takes place. During the laboratory, we observed that collaborative dynamics are not confined to a specific developmental phase but are instead pervasive throughout the process. In addition to this reference, we drew inspiration from the study by Zhang et al. (2023). The authors, reworking the model by Wu et al. (2021) (Kurosu, 2021), focused on the degree of involvement of stakeholders in the co-creation dynamics. 
According to their interpretation, it is possible to move from a level with little AI involvement, in which most tasks are attributed to human agency, to an intermediate level in which AI and humans share reciprocal information for the development of the project, and lastly to a third level in which AI’s contribution exceeds that of humans in the performance of tasks, assuming a semi-autonomous role.

The concept of process leadership was also discussed by Kantosalo and Takala (2020), who developed the dichotomy between “Human initiative” and “Computational initiative” to identify the actor primarily responsible for an action within the co-creation dynamic. Shi et al. (2023) similarly addressed this issue, using the concept of “agency” to describe the predominant agent in the co-creation process. The theme of agency was placed at the heart of the first analysis matrix, which aimed to evaluate the extent of AI tools’ engagement across all stages of the fashion design process and to determine whether the contribution was primarily attributable to the machine or the human side. These considerations led to the formulation of a rating scale based on five degrees of agency. The initial level, (1) “Designer works autonomously,” establishes the complete independence of designers from AI tools. From this baseline, trust in and cooperation with AI increase through the levels (2) “Designer-Led Process empowered by AI Assistance”; (3) “Designer-AI balanced cooperation”; and (4) “AI-Led Process with Designer Support,” up to the highest level of integration of Gen AI tools, (5) “AI Works Autonomously,” in which the machine is completely independent in pursuing tasks. Within this framework, the degree of agency was associated with two factors: first, the extent to which the human and AI tools involved in the process contribute to the design activity, and second, how their performances mutually influence each other, considering that the dominant actor typically exerts substantial influence over the actions of others (Shi et al., 2023). 
This first matrix enabled us to examine the results to define which agent was in control of the collaboration, which can result in a designer-driven approach, where the designer takes the lead in the design activities, or an AI-driven approach, where computational agents can automate design activities with a high degree of freedom.
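The five-degree agency scale lends itself to a compact encoding. The sketch below is a minimal illustration: the enum member names are shorthand introduced here, and summarising a project by averaging its per-stage ratings is an assumption made for the example, not the procedure followed in the study.

```python
from enum import IntEnum
from statistics import mean
from typing import Iterable


class Agency(IntEnum):
    """Five degrees of agency from the first analysis matrix."""
    DESIGNER_AUTONOMOUS = 1  # "Designer works autonomously"
    DESIGNER_LED = 2         # "Designer-Led Process empowered by AI Assistance"
    BALANCED = 3             # "Designer-AI balanced cooperation"
    AI_LED = 4               # "AI-Led Process with Designer Support"
    AI_AUTONOMOUS = 5        # "AI Works Autonomously"


def leading_agent(ratings: Iterable[Agency]) -> str:
    """Summarise per-stage ratings as designer-driven, balanced or AI-driven.

    Assumption: the midpoint of the scale (3) separates the two approaches,
    and the arithmetic mean is an acceptable summary of ordinal ratings.
    """
    average = mean([int(r) for r in ratings])
    if average < Agency.BALANCED:
        return "designer-driven"
    if average > Agency.BALANCED:
        return "AI-driven"
    return "balanced"


# Hypothetical ratings for four stages of one project.
stages = [Agency.DESIGNER_AUTONOMOUS, Agency.DESIGNER_LED,
          Agency.DESIGNER_LED, Agency.BALANCED]
print(leading_agent(stages))  # designer-driven
```

Because the scale is ordinal, a median or a per-stage profile may be a more defensible summary than the mean; the averaging here only keeps the example short.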

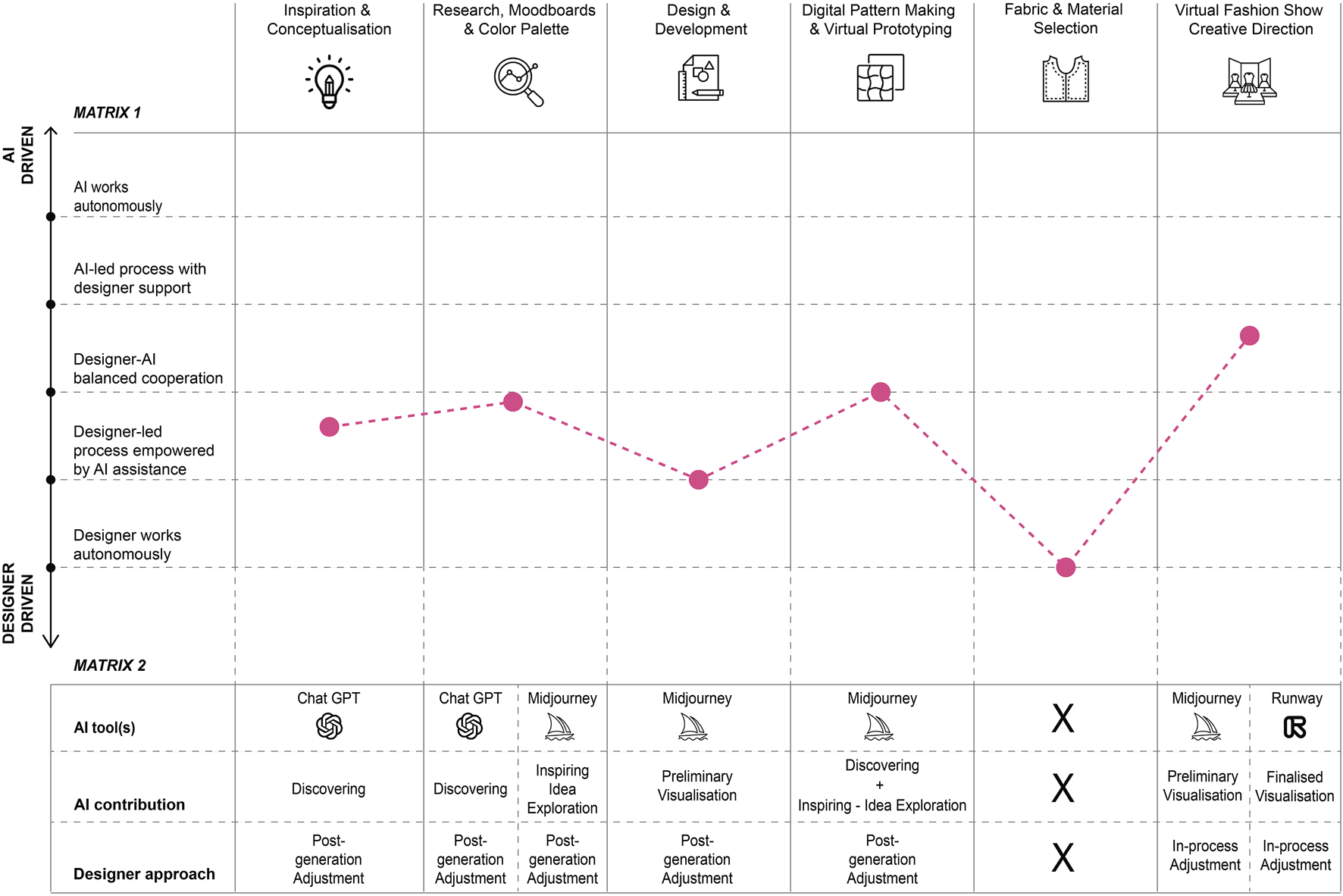

The second evaluation matrix was created to monitor the involvement of AI tools in the process alongside the designer’s actions. The first component of this analysis model refers to (A) “AI Tools,” providing information on the application of (a-i) Midjourney, (a-ii) Chat GPT 3.5 and (a-iii) Runway in each of the design phases, either applied individually, in combination or, on some occasions, not included in the process.

The second category, (B) “AI contribution,” was inspired by Shi et al. (2023) and focuses on identifying the modalities in which AI can assist the designer through the capabilities of “Discovering,” “Visualising,” and “Creating.” This led to the definition of five categories: (b-i) “Discovering,” (b-ii) “Inspiring - Idea exploration,” (b-iii) “Inspiring - Idea consolidation,” (b-iv) “Preliminary Visualisation” and (b-v) “Finalised Visualisation.” The first capability proposed by the authors refers to the support offered by AI in studying and providing insights into users’ preferences through analysing their data. In our case, this capability has been translated into the ability of Generative Large Language Models to discover and provide amplified knowledge due to the vastness of the data and information on which they have been trained. Accordingly, (b-i) “Discovering” identifies the application of AI to conduct an in-depth analysis of visual or textual content to detect meaningful insights. This process provides designers with valuable information, influencing the creative process at an early decision-making stage. “Inspiring” is the second concept we identified, based on a re-interpretation of the “Visualising” concept proposed by Shi et al. (2023). It describes the AI’s ability to provide targeted and serendipitous inspirations to designers and to develop moodboards, visual elements and concept boards, thus stimulating divergent and convergent thinking to broaden the creative horizon. 
Based on our observations during the laboratory, we further divided this category into (b-ii) “Inspiring - Idea exploration,” where AI assists designers in discovering new ideas and pushing the boundaries of creativity, offering innovative perspectives that go beyond conventional parameters and encouraging out-of-the-box thinking and the generation of original concepts; and (b-iii) “Inspiring - Idea consolidation,” where AI supports the designer in exploring the references provided, but within certain constraints set by the designer. In this case, the AI assists the designer in consolidating and refining existing ideas, providing input and suggestions that align with the designer’s creative vision. The “Creating” feature (Shi et al., 2023) inspired the third evaluation element of (B) “AI contribution.” According to Shi et al. (2023), AI can assist designers in externalising and improving the presentation of their ideas: it can generate low-fidelity rough drafts as a starting point for the subsequent detailed design process or refine designer ideas via repeated designer-AI interactions. In our interpretation, this concept assumes the form of a “Visualisation” activity, divisible into (b-iv) “Preliminary Visualisation,” when Gen AI supports the designer in creating a preliminary visualisation that serves as a starting point for the further development of the design and is subsequently redefined by designers according to their creative vision; and (b-v) “Finalised Visualisation,” in which Gen AI is responsible for the refinement of the design proposals according to established guidelines. In this scenario, the tool generates finalised outputs ready to be integrated into the project, ensuring their alignment with the objectives and design principles in the design brief.

Observation of the students’ workflow revealed that the (b-i) “Discovering” category is oriented towards the application of AI for analytical purposes, starting from the designers’ input. The input can be fed to the machine in three forms: as original archival materials, such as images and textual descriptions; as archival materials reinterpreted by designers, in cases where these have undergone a process of coding or human editing that shows an original contribution by the designer; or as investigative inquiries regarding a particular subject, in cases where the Gen AI tool is used to explore more general issues. The elements of (b-ii) “Inspiring - Idea exploration” and (b-iii) “Inspiring - Idea consolidation” can be united under the umbrella of AI applied for “Enhancing Design Process.” However, the two can be differentiated depending on whether the Gen AI tool is adopted with a “curious approach” towards exploring its creative capabilities or is employed with a specific goal defined by the designer. The last categories, (b-iv) “Preliminary Visualisation” and (b-v) “Finalised Visualisation,” instead focus on the translation of concepts into actual visual representations. The differentiation relates to whether the designer accepts the generated result as it is or feels it requires further refinement. It should be noted that the different design phases within Artificial A(i)rchive inherently require different applications of the Gen AI tool. The progression goes from a more analytical use to an exploratory approach and finally to the final stages of the development process, which require a more visual application of AI.

The third element contributing to the construction of the second analysis matrix looks at the (C) “Designer’s Approach” towards AI. In this case, the definition of archetypical designer behaviour was not based on a literature review but resulted from observing the action patterns enacted by the students during the laboratory. Five types of attitudes emerged from this analysis. The first is (c-i) “Blind acceptance,” which defines the designer’s unconditional acceptance of the AI-generated results, without in-depth examination or reprocessing. This passive approach can hinder critical evaluation and overlook potential improvements, relying solely on AI-generated content without considering its accuracy or its alignment with project goals. The second archetype is (c-ii) “Validation,” in which the designer verifies the output generated by the AI, assessing its accuracy, relevance and consistency with the project’s objectives and guidelines. Neither of these first two approaches involves an adjustment process during generation. This is instead the case with the third category, (c-iii) “In-process adjustment,” where the designer intervenes during the AI generation process to make real-time adjustments to improve the final output. The fourth category, (c-iv) “Post-generation adjustments,” in contrast, describes the designer’s attitude in refining the AI result after its generation to better align it with the project’s specific requirements. It should be noted that this approach may implicitly incorporate a previous (c-iii) “In-process adjustment.” Finally, the last category, (c-v) “Rejection of the result,” defines the designer’s refusal of the AI-generated result, considering it insufficient to capture the creative intent or meet the project’s requirements.
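The three components of the second matrix, (A) “AI Tools,” (B) “AI contribution” and (C) “Designer’s Approach,” can be expressed as a simple coding scheme. The following is a hedged sketch: the `PhaseCoding` class, its `label` format and the sample coding are assumptions for illustration and are not taken from the figures.

```python
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet, Optional


class AITool(Enum):
    MIDJOURNEY = "a-i"
    CHAT_GPT_3_5 = "a-ii"
    RUNWAY = "a-iii"


class AIContribution(Enum):
    DISCOVERING = "b-i"
    IDEA_EXPLORATION = "b-ii"
    IDEA_CONSOLIDATION = "b-iii"
    PRELIMINARY_VISUALISATION = "b-iv"
    FINALISED_VISUALISATION = "b-v"


class DesignerApproach(Enum):
    BLIND_ACCEPTANCE = "c-i"
    VALIDATION = "c-ii"
    IN_PROCESS_ADJUSTMENT = "c-iii"
    POST_GENERATION_ADJUSTMENTS = "c-iv"
    REJECTION = "c-v"


@dataclass(frozen=True)
class PhaseCoding:
    """Coding of one design phase; an empty tool set means no AI was used."""
    phase: str
    tools: FrozenSet[AITool] = frozenset()
    contribution: Optional[AIContribution] = None
    approach: Optional[DesignerApproach] = None

    def label(self) -> str:
        """Compact code string, e.g. 'a-i+a-ii / b-ii / c-iii'."""
        tools = "+".join(sorted(t.value for t in self.tools)) or "none"
        contribution = self.contribution.value if self.contribution else "-"
        approach = self.approach.value if self.approach else "-"
        return f"{tools} / {contribution} / {approach}"


# Hypothetical coding of a single phase.
coding = PhaseCoding(
    phase="Design Development",
    tools=frozenset({AITool.MIDJOURNEY, AITool.CHAT_GPT_3_5}),
    contribution=AIContribution.IDEA_EXPLORATION,
    approach=DesignerApproach.IN_PROCESS_ADJUSTMENT,
)
print(coding.label())  # a-i+a-ii / b-ii / c-iii
```

Modelling the tools as a set reflects the observation that tools were applied individually, in combination, or not at all in a given phase.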

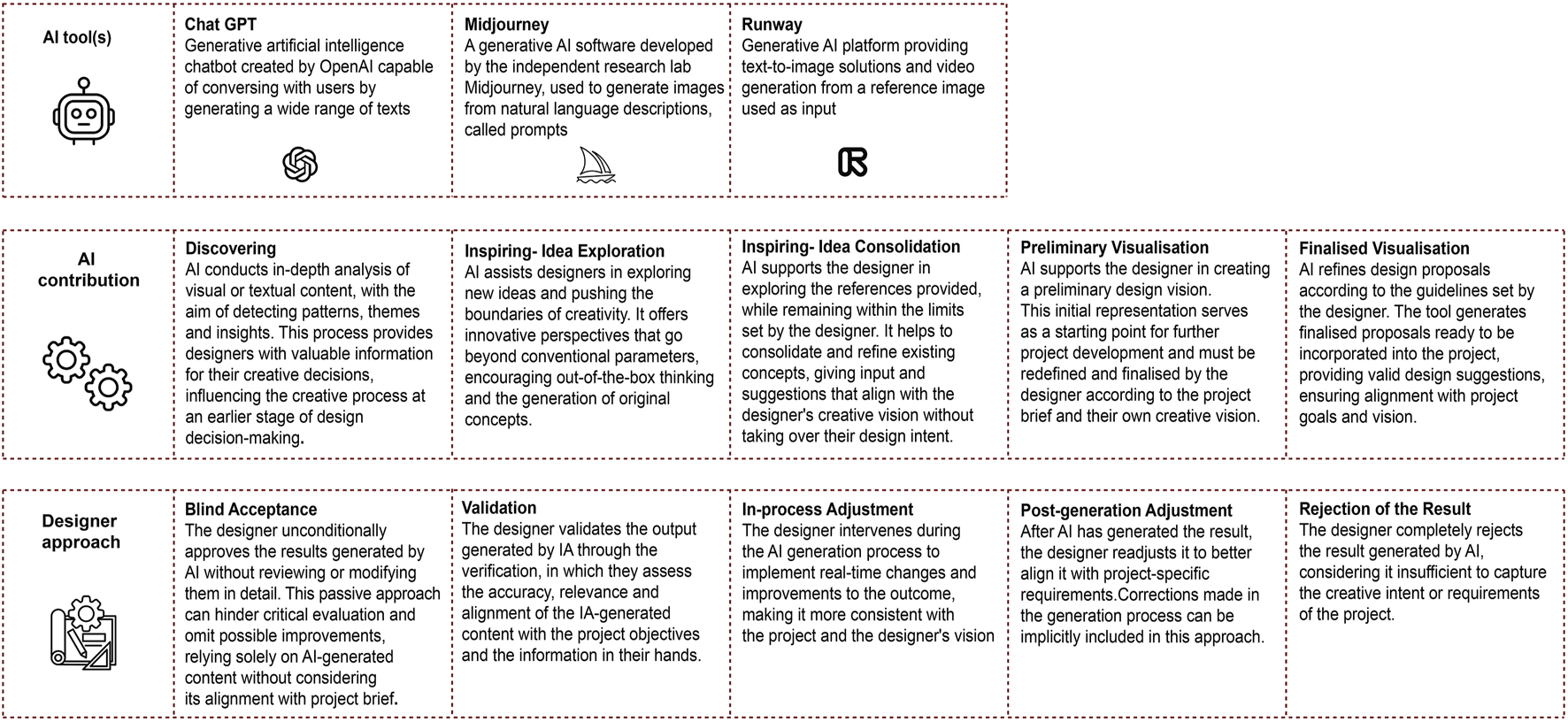

An example of the application of the two matrices is presented in Figure 8, while in Figure 9, an overall visualisation of the analysis of the ten projects considering the first matrix can be observed.

FIGURE 8

Example of fashion design process codification by application of the two matrices.

FIGURE 9

Visualisation of the Designer-AI Co-creation process for the ten groups, based on the first analysis matrix.

Analysis of results and identification of designer-AI interaction dynamics

Having introduced the two matrices, this section focuses on analysing the co-creation dynamics with the Gen AI tools experienced by the students. To this end, we introduce Rhodes’ (1961) “4 P’s of Creativity” model (Oppenlaender, 2022), based on the codification of creative behaviour into “Person,” “Process,” “Press,” and “Product.” The “Person” examines the physical and mental dimensions of the creative individual, relating them to creative expression. The “Process” addresses the analysis of the problem-solving mechanisms that lead the “Person” to develop a creative idea. The “Press” defines the relationship between the “Person” and the environment, investigating how this affects the creativity of the “Person.” Finally, “Product” refers to translating the creative idea into its tangible form.

For the experiment conducted, we considered some reinterpretations of Rhodes’ model. Because it describes creativity as an individual phenomenon, the model was not directly applicable to the investigation of co-creative dynamics (Kantosalo and Takala, 2020) or of collaboration between humans and AI. Glăveanu (2013) revisited Rhodes’ model by discussing creativity as a process occurring between the individual and the surrounding environment, thus introducing a “distributed creativity” perspective. Within this discussion, the “4 P’s of Creativity” are updated into a “5 A’s framework,” which includes the “Actor,” the “Action,” the “Artifact,” the “Audience,” and the “Affordance.” The “Actor” is an extended interpretation of the “Person” that no longer focuses on the individual trait but emphasises the reciprocal influence between the individual and society. The “Action” perspective is closely related to the “Process,” although mental processes are read in relation to the context in which the action occurs (Glăveanu, 2013). The dimension of “Artifact” similarly extends the meaning of “Product” to the contextual dimension. Finally, “Press” is discussed by Glăveanu (2013) in a twofold interpretation, which includes both the social dimension of creative ideas, expressed by “Audience,” and the material one, addressed by “Affordance.”

A subsequent revision of Rhodes’ “4 P’s of Creativity” was proposed by Jordanous (2016) to include the dimensions of computational creativity in the model. In this scenario, the “Person” was reimagined as the “Producer,” which included the software, robot, or computational agent, its developer, and the one who interacts with the tools for creative purposes. The “Process” was extended to the computational dimension, encompassing the algorithmic processing and the human-machine and machine-environment interactions that lead to creative productions. The “Product,” in the context of computational creativity, is often read as evidence of the machine’s creativity, thus risking obscuring the importance of the other “P’s” that contribute equally to the creative process. The “Press” acts in two directions in this context: the environment is read as the agent that influences creators in their production while also being responsible for the recognition and qualification of the artwork as such (Jordanous, 2016).

The analysis of the Artificial A(i)rchive laboratory also incorporated the perspective proposed by Kantosalo and Takala (2020), in which the three previously discussed models of creativity were merged into the framework of the “Five C’s for Human-Computer Co-Creativity,” based on “Collective,” “Collaboration,” “Contributions,” “Community” and “Context,” which together lead to the definition of the “Communication Framework”. The “Collective” represents the creative collaboration unit involving at least two collaborators, one artificial and one human. Although it is a single entity, within the “Collective,” the information exchanges engage the collaborators individually (Kantosalo and Takala, 2020). “Collaboration” defines the individual creative processes of collaborators and their interaction. It is articulated through a series of actions that frame the dynamics of co-creation, the initiation of which can be human-initiated, machine-initiated, or jointly initiated. The “Contribution” refers to the information, materials, commands, inputs, and feedback exchanged by collaborators during the “Collaboration,” leading and impacting the final contribution. 
The “Community” expresses creativity’s social and material nature, articulated by the network of artists, critics, audiences, curators, collectors, and institutions interfacing with the “Collective.” The “Context,” which can be assimilated to Csikszentmihalyi’s (1996) concept of “Domain” (Csikszentmihalyi, 2014), defines the surrounding environment that acts on the “Collective” in its most material expression, encompassing the set of materials of an artistic and normative nature with which the “Collective” has interacted during the “Collaboration.” The “Communication Framework” represents the joining element of the model, bringing its components together and activating a process of meta-reflection and control over co-creation based on a dynamic of negotiation between the parties involved in the framework (Kantosalo and Takala, 2020).

Given the above discussion, we mapped the agents involved in the Artificial A(i)rchive laboratory onto the “Five C’s for Human-Computer Co-Creativity” framework (Kantosalo and Takala, 2020). The observed co-creation dynamics can be defined as follows. The “Collective” was formed by the students, divided into groups, and the Gen AI tools involved in the project development. The most elementary configuration saw the sole adoption of Midjourney (Groups 04, 06 and 09); in some cases, two computational collaborators were employed, with the integration of Chat GPT 3.5 to support the creative process (Groups 05 and 07); finally, more articulated “Collectives” were formed, in which Runway was also included (Groups 01, 02, 03, 08 and 10). The “Collaboration” was established by sharing the design objective and guidelines contained in the project brief. The creative “Contributions” of Gen AI consisted of generating images, texts, and videos to inspire designer creativity and enhance the visualisation of ideas. Designers, instead, contributed to the process mainly through action initiation, fashion design-specific knowledge, and the general supervision and coordination of the project’s direction. Their “Contributions” consisted of commands, feedback and textual or visual materials used as input for the Gen AI tools. These materials may have been taken from Gianfranco Ferré’s archive, external sources, self-generated content, or previous designer-AI co-creation interactions. The “Community” formed within the laboratory was personified by the lecturers, as project supervisors, and by classmates who shared similar work experiences. The “Context” in this scenario comprised the framework within which the “Artificial A(i)rchive” experience was outlined, based on the guidelines and objectives summarised in the project brief, which embodied the normative nature of the context. 
The “Communication Framework” was represented by each student’s inner meta-reflection, which occurred during the various co-creation sessions with the Gen AI tools, to ensure the results were consistent with the project and after the laboratory to provide a critical observation of the experienced designer-AI collaboration.

Definition of designer–AI collaboration archetypes: exploration of potential and critical issues

The interpretation of the Artificial A(i)rchive results through the application of the two matrices allowed us to identify the co-creation dynamics common to the groups participating in the laboratory. Mapping the integration of Gen AI tools in the fashion design process also led to a preliminary reflection on the alignment between the capabilities of the tested instruments and the application domain requirements. This consistency was analysed through the levels of “Artefact Knowledge,” “Design Intuition” and “Design Language,” which delineate how knowledge in design is structured, acquired and transferred (Thoring et al., 2023).

The resulting discussion can contribute to the debate around human-AI co-creation and the intersection of natural and artificial creativity, specifically in fashion design.

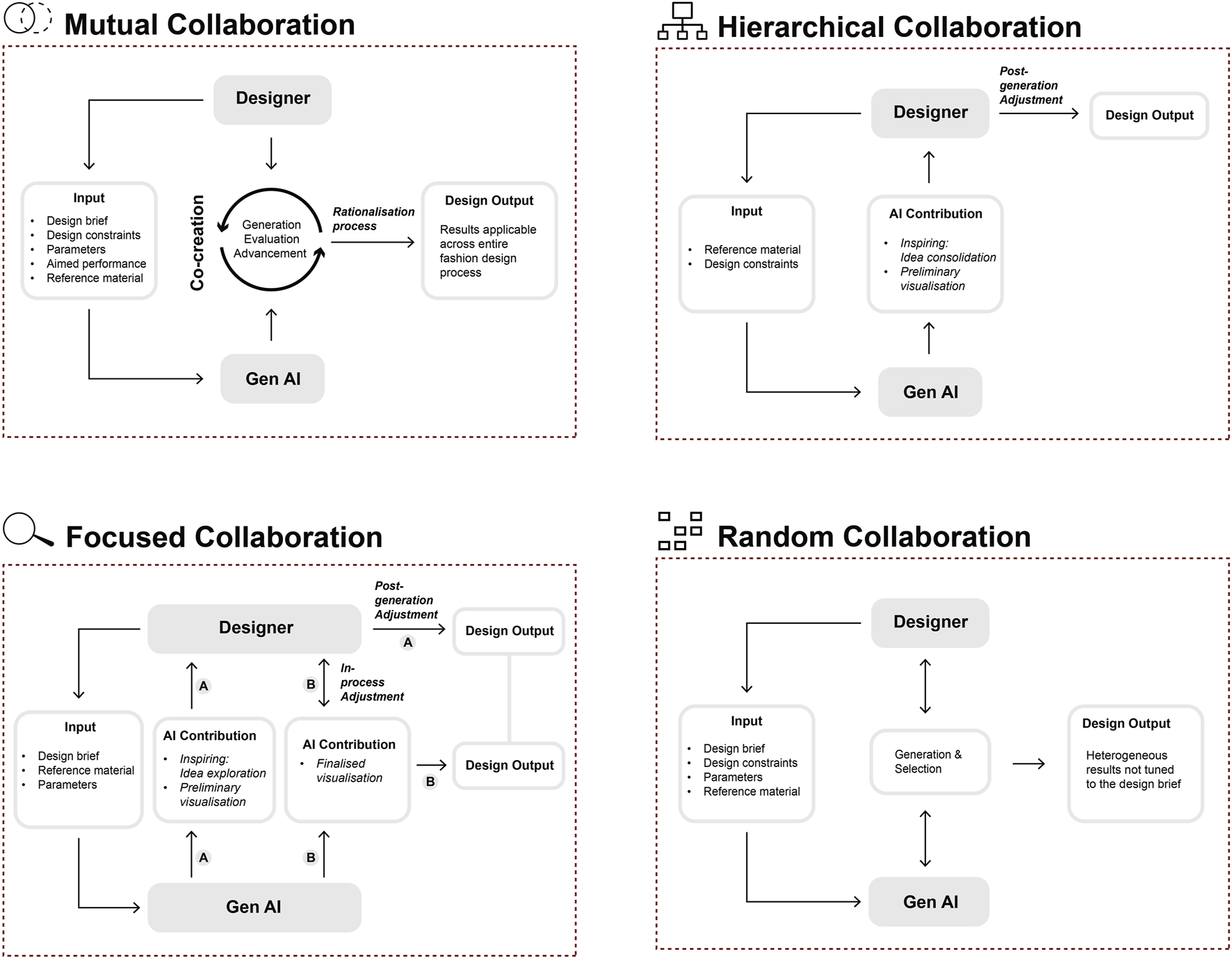

What follows is an interpretation of the collaborative patterns that emerged from our experimentation. We identified four distinct co-creation archetypes (Figure 10), framed on the basis of the “Collaboration” dynamics and defined by the collaborators’ “Contributions” observed within the ten “Collectives” established within the “Context” of the Artificial A(i)rchive laboratory. We propose definitions of “Hierarchical collaboration,” “Focused collaboration,” “Random collaboration,” and “Mutual collaboration.” The latter model implies an optimal collaborative dynamic which, although it did not emerge directly from the mapping of results, suggests a trajectory to aspire to in developing co-creation strategies that optimise design processes within the fashion industry.

FIGURE 10

Designer-AI collaboration archetypes.

“Hierarchical collaboration” describes a co-creation model in which AI facilitates in-depth analysis and extraction of information from the material shared by the designer. In this framework, Gen AI’s main “Contribution” is the assistance provided to the designer in analysing material and identifying potential suggestions, offering valuable insights for design decision-making. Accordingly, the designer’s “Contribution” is manifested in controlling the decision-making process and directing the operation of Gen AI according to their design vision. Consequently, the application of Gen AI to creative expression is limited and focuses primarily on content exploration within a well-defined framework and on the refinement of specific design aspects previously developed by the designer. Within this framework, we observed that AI was mainly applied for (b-iii) “Inspiring - Idea consolidation” and for (b-iv) “Preliminary Visualisation,” with a prevalent designer attitude based on (c-iii) “In-process adjustments,” (c-iv) “Post-generation adjustments,” and (c-v) “Rejection of the result.” What emerged is a limited adoption of AI during the process, creating unbalanced participation between the human and computational collaborators forming the “Collective.” A clear hierarchy in the co-creation dynamics is defined, in which the designer holds the reins of the creative and development process.

In “Focused Collaboration,” AI acts as a catalyst for creative expression, enabling the designer to come up with innovative ideas and visualisations. Its “Contribution” focuses on generating inspirational content based on the designer’s input, boosting creativity and pushing boundaries. The designer exploits AI-generated suggestions to explore new concepts, which enriches the variety of design results. The designer’s “Contribution” is expressed in the strategic explanation of Gen AI capabilities and in the control exercised over the process, mainly concentrated in the initial phase, which establishes a design direction, and in the final phase of experimentation, where the AI-generated results are subjected to a fine-tuning and rationalisation procedure. In this case, Gen AI is mainly involved in (b-ii) “Inspiring - Idea exploration,” (b-iv) “Preliminary Visualisation,” and (b-v) “Finalised Visualisation,” and the designer acts through (c-ii) “Validation,” (c-iii) “In-process adjustments” and (c-iv) “Post-generation adjustments.” As in the first archetype discussed, the participation of AI and designers is not equally divided within the “Collective.” However, there is a more confident and curious attitude towards AI capabilities than in the “Hierarchical collaboration” model.

In the third archetype, “Random collaboration,” there is a mismatch between the capabilities of the Gen AI and the ability of the designer to coordinate the creative process. The result is a pattern in which the roles of AI and designers in the “Collaboration” fail to be clearly defined and tasks are allocated at random. The “Contributions” form a fragmented input and feedback mechanism, leading to heterogeneous experimentation and fuzzy design. The lack of control over the process leads the designer to use the tools inconsistently and to overestimate their generative capabilities, predominantly adopting a (c-i) “Blind acceptance” attitude. In contrast to the previously discussed archetypes, AI contributes widely and transversally to the creative process. However, even though both human and computational collaborators are highly involved in the design phases, the final “Contribution” generated by the “Collective” lacks the coherence and the adherence to the specifications given by the “Context” needed for it to be entirely accepted by the “Community.”

The “Mutual collaboration” archetype represents an ideal co-creation framework in which Gen AI supports the designer at every stage of the design process, facilitating a seamless workflow. The AI “Contribution” supports material analysis and inspiration research, stimulating designers’ divergent and convergent thinking. The AI tools also assist in the “Digital Pattern Making & Virtual Prototyping” phase. The “Communication Framework” facilitates the improvement of AI efficiency: the computational collaborator is empowered to continuously re-adapt its model by learning from the designer’s actions and feedback, thus offering fine-tuned outputs corresponding to the designer’s requests. The designer’s “Contribution” is expressed in the overall supervision of the process, in which AI capabilities are fully exploited to enhance the design results; hence, the predominant attitudes are (c-ii) “Validation” of the AI-produced results and (c-iii) “In-process adjustment” during the collaborative dynamics. Within this model, the project objectives are shared equally within the “Collective.” The framework of “Mutual Collaboration” implies a constant adjustment of AI models, built on the exchange of “Contributions” between collaborators, as also discussed by Grabe (2022).

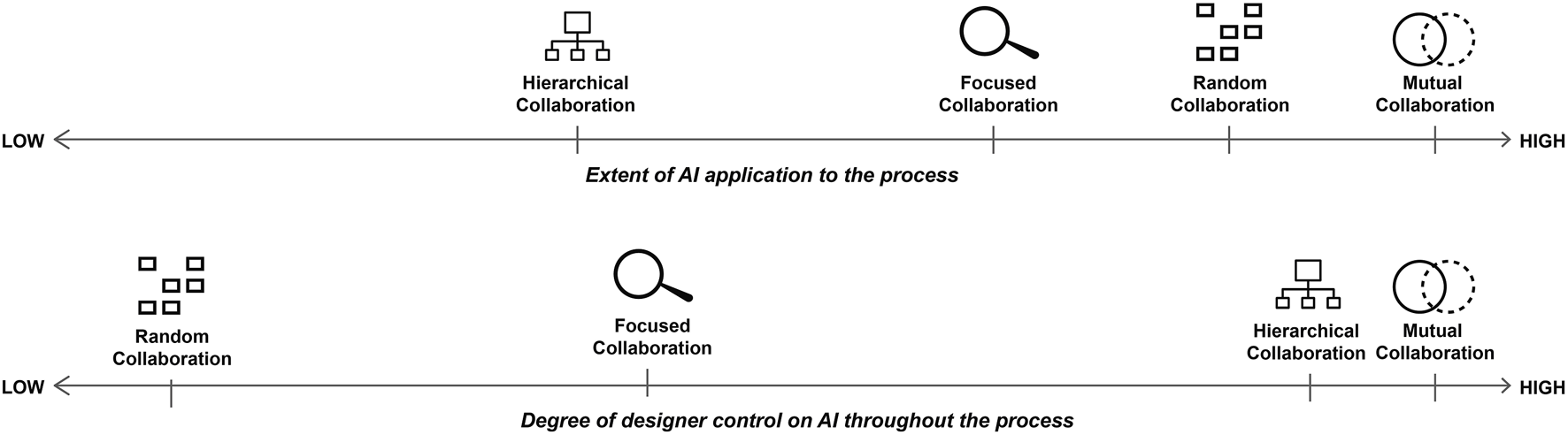

Returning to the theme of agency, we then evaluated the archetypes based on their “Extent of AI application to the process.” What emerges is that the highest application of Gen AI tools is attributable to “Mutual Collaboration,” followed by “Random Collaboration,” even though in these two frameworks the pervasiveness of Gen AI tools in the process is radically different in nature. Next on the axis come “Focused Collaboration” and “Hierarchical Collaboration,” which rank last due to the designers’ conscious willingness to limit the scope of AI.

Also related to the attribution of leadership of the actors in the process, a second reading was proposed based on the “Degree of designer control over AI throughout the process”. In this instance, “Mutual Collaboration” remains highly ranked due to its nature as an ideal model for inspiration, while we can observe an opposite trend in the positioning of the other three frameworks. Indeed, “Hierarchical Collaboration” embodies a high degree of control of AI, which gradually diminishes in “Focused Collaboration” down to a poor level of control in “Random Collaboration.” A visualisation of the understanding of designer-AI collaboration archetypes in this regard is proposed in Figure 11.

FIGURE 11

Positioning of designer–AI collaboration archetypes based on the extent of AI application and the degree of designer control throughout the process.
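The relative positioning described above can be summarised as ordinal ranks on the two axes. This is a sketch under stated assumptions: the numeric values only encode the ordering reported in the text (higher means more), the equal score for designer control in “Hierarchical” and “Mutual” is an assumption, and none of the numbers are measurements read from Figure 11.

```python
# Ordinal positions only; the numbers carry no meaning beyond their order.
ARCHETYPES = {
    "Hierarchical": {"ai_extent": 1, "designer_control": 3},
    "Focused":      {"ai_extent": 2, "designer_control": 2},
    "Random":       {"ai_extent": 3, "designer_control": 1},
    "Mutual":       {"ai_extent": 4, "designer_control": 3},
}


def ranked(axis: str) -> list:
    """Return archetype names ordered from highest to lowest on `axis`."""
    return sorted(ARCHETYPES, key=lambda name: ARCHETYPES[name][axis],
                  reverse=True)


print(ranked("ai_extent"))
# ['Mutual', 'Random', 'Focused', 'Hierarchical']

# On the control axis, the three observed archetypes run in the opposite order.
control = ranked("designer_control")
observed = [name for name in control if name != "Mutual"]
print(observed)
# ['Hierarchical', 'Focused', 'Random']
```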

Conclusion

Reflections on the Artificial A(i)rchive laboratory

The Artificial A(i)rchive experience aimed to address the contemporary issue of integrating Gen AI into the creative and product development processes of fashion design. It thus explored the application of Gen AI in the context of the “pro-activity/professional creativity” discussed by Kaufman and Beghetto (2009), which refers to creativity that requires a certain degree of expertise, mastery of tools and social recognition of its results (Ivcevic, 2024), within the specific application domain of fashion. The revolution we are experiencing in the creative sphere, which is also impacting the design sector, represents a real transformation in media creation, which can be read as a natural evolutionary trend of methodologies and techniques reflecting the industry’s technological progress (Manovich, 2023). Just as designers moved from the pencil to digital representation and modelling techniques with the introduction of CAD software, they are now approaching Gen AI, which results from a remarkable acceleration in Deep Learning capabilities over the last two decades (Thoring et al., 2023). This progressive transition is reflected in the shift of the creative production paradigm from manual representation to digital simulation and finally to the prediction of results (Manovich, 2023), enabled by the capabilities of Large Language Generative Models. The Gen AI tools resulting from these models are currently the focus of research on their integration into design processes for the proposition of new collaboration paradigms (Kantosalo and Takala, 2020; Kurosu, 2021; De Peuter et al., 2023; Shi et al., 2023). 
These elements formed the basis for the Artificial A(i)rchive laboratory analysis, which focused on the collaboration between fashion design students and three AI tools: Midjourney (a text-to-image and image-to-image generation tool), Chat GPT 3.5 (a chatbot that simulates human reasoning), and Runway (a text-to-video and image-to-video generation tool) for developing a capsule collection inspired by Gianfranco Ferré’s Striped Jacket (F/W 1985). This allowed us to test a hybridised process between humans and AI agents, in which the latter assumed the role of tools for “augmented creativity” (Vinchon et al., 2023) within a human-AI co-creation framework.

The development of two analysis matrices based on the study of designer-AI collaboration models (Kantosalo and Takala, 2020; Kurosu, 2021; De Peuter et al., 2023; Shi et al., 2023) enabled an analysis of the results derived from the integration of the three Gen AI tools into the fashion design creative and product development process. Mapping the collaboration dynamics deployed by the students led to the definition of four collaboration archetypes: “Hierarchical Collaboration,” “Focused Collaboration,” “Casual Collaboration,” and “Mutual Collaboration,” each reflecting a different level of “Application of AI to the process” and “Degree of designer control on AI throughout the process.”

Overall, the results that emerged from the Artificial A(i)rchive laboratory led us to reflect on three different levels of design knowledge (Thoring et al., 2023): “Design Language,” i.e., the degree of design expertise communicated through specific terminology or a technical visual language; “Design Intuition,” i.e., the implicit knowledge required for the conceptualisation of a design; and “Artefact Knowledge,” i.e., the translation of the design features into tangible output. In light of this framework, our experimentation revealed that the Gen AI tools involved in the process lack the “Design Language” of the fashion industry. Consequently, their “Design Intuition” could not be processed into “Artefact Knowledge” capable of reflecting garment construction principles and technical features. Gen AI contributed to “Discovery” in the conceptualisation and research phase, to “Inspiration” for the stimulation of divergent and convergent thinking, and to “Visualisation” for the presentation of design proposals and their refinement. Nevertheless, the selected Gen AI tools showed a gap in sector-specific expertise. Indeed, the “Design Development” and “Digital Pattern Making & Virtual Prototyping” phases required the technical intervention of the fashion design students to translate the generated material into viable design solutions. It should be noted, however, that some experimental tools are attempting to close this gap (Kularatne et al., 2019; Guo et al., 2023).

A further conclusion that emerged was that the design complexity expressed by Gianfranco Ferré’s principles of “Form,” “Human Body,” and “Material” (Ferré and Frisa, 2009) could not be interpreted and codified by Gen AI, further underlining the inability of these tools to encode the structures, volumes and geometries involved in garment construction. Therefore, we can assert that the specific knowledge of the fashion designer remains the essential driving force of the design process, thus validating the scenario of “Collective Intelligence” based on a balanced exchange of knowledge between humans and machines according to the degree of “interconnectedness, diversity, hierarchy and critical culture” (Peeters et al., 2021, p. 223), leading to industry optimisation (Lee, 2022). The resulting rebalancing of human and AI strengths can be expressed in a redefinition of fashion industry workflows to achieve a seamless “AI-assisted design process” (Choi et al., 2023) in which AI can be integrated from “line planning and research” (Senanayake, 2015) through to prototype construction. Although this advanced level of co-creation has not yet been realised, the impact of Gen AI is beginning to resonate strongly within the fashion domain. The BoF-McKinsey State of Fashion 2024 report revealed that 73% of fashion industry executives are ready to integrate this technology into the supply chain by 2024. However, only 28% will use it in the creative processes of product design development (The State of Fashion 2024, 2023). Indeed, concerns about the creative application of AI remain high and, as discussed by Campero et al. (2022), a unified evaluation tool that assesses the actual benefit of the collective intelligence produced by human-computer synergy is still lacking, thus preventing an overall assessment of the benefits brought by AI. 
In our view, AI evaluation perspectives applied to design should be linked to a “Human-Centered AI-Co-Creation paradigm” (Zhang et al., 2023), which can assess and address the ethical implications of these tools. One of the most critical challenges in this regard is ensuring the transparency and explainability of AI tools through the development of interpretable models and decision-making reports that can validate the ethical conduct of the model (Sira, 2023; Shneiderman, 2021). This outlook aligns with the EU’s AI legal framework, approved in 2024, which aims to regulate AI applications across various sectors while recognising the development opportunities offered by large generative models. The document promotes a more ethical and sustainable integration of AI in the creative domain by requiring systems and deployers to trace the origin of generated content, mandating transparency in AI models to ensure copyright compliance, and prompting deployers to disclose when AI has been used in content creation (European Parliament & Council of the European Union, 2024). Although this regulatory framework addresses the adoption of AI at a systemic level, some of its principles were actively integrated into the Artificial A(i)rchive laboratory, with the aim of raising awareness among future designers about exploiting the potential of technology while also considering its responsible use. In particular, the experience focused on transparency regarding AI usage throughout the design process, requiring students to track all interactions with Gen AI. This practice also helped ensure that human agency remained central in decision-making. As a result, it became evident that human judgment continues to play a central role in shaping AI “creativity,” reinforcing its function as a tool that supports rather than replaces human skills (Vinchon et al., 2023). 
Other aspects of the regulation, which require more technical or programming intervention, could not be directly applied but were addressed as topics of discussion during the laboratory, prompting students to reflect on these broader implications critically.

Limitations and Future Research

The Artificial A(i)rchive laboratory, conducted with students in the second year of the Master’s Degree in Design for the Fashion System at Politecnico di Milano, made it possible to test the integration of three different Gen AI tools (Midjourney, Chat GPT 3.5, Runway) within the fashion design creative and product development process. Although the results obtained from the collaboration between students and Gen AI have contributed to the discussion on the co-creation dynamics between natural and computational creativity in the design domain, some aspects of the study call for a discussion of its limitations and of future research perspectives. First, it is important to emphasise that the interpretations derived from the qualitative analysis presented in this study provide only a partial assessment of the application of Gen AI in the fashion domain. The work carried out by students may not fully capture the collaborative dynamics or the effective use of Gen AI tools. Furthermore, the proposed codification of the co-creation archetypes (“Hierarchical Collaboration,” “Focused Collaboration,” “Random Collaboration,” and “Mutual Collaboration”), derived from the qualitative analysis and grounded in a literature review on designer-AI collaborative models, is subject to potential bias and may be influenced by the authors’ perspectives and methodological choices. Consequently, the co-creation paradigms proposed in this article offer an overview limited to this specific research, and their applicability to other contexts should be validated through further experimentation.

To address the identified limitations and further explore the integration of Gen AI tools in fashion, a revised edition of the Artificial A(i)rchive Laboratory could focus on key criticalities emerging from the discussion.

First, investigating the gap between domain-specific and general-purpose Gen AI tools would provide valuable research insights. Comparative testing of generic versus industry-specific AI tools could further assess their adaptability to fashion requirements and evaluate their practical “Design Intuition” knowledge (Thoring et al., 2023). This limitation was evident in our study, where the inability of the selected Gen AI applications to recognise the specific technical characteristics of garment construction required design practitioners to translate the Midjourney-generated designs into 2D digital paper patterns to assess their wearability on a virtual avatar. Integrating fashion-specific Gen AI tools could enable participants to co-define garment technical requirements directly through the application, reducing the need for subsequent human intervention and thus suggesting a revised modelling of the co-creation dynamics discussed in this research. Furthermore, this targeted focus could help identify the specific requirements that Gen AI tools must meet to support the fashion design process, such as ensuring a clear and structured relationship between input and output (Kim, 2024).

A second aspect that could be addressed is the in-depth investigation of designer-AI interaction modes and tool controllability throughout the process, with particular attention to prompt construction. Indeed, as pointed out by Turchi et al. (2023), the complexity of prompt writing and the unclear impact that each word can have on the generative process conflict with the users’ intention to control specific design details. In that process, the unpredictable nature of the Gen AI model leads to the generation of what is termed “unexpected random results” (Turchi et al., 2023). A specific focus in this direction could contribute to investigating how the lack of control over the generation process leads to user frustration and resistance to AI integration, as well as analysing the factors that favour and inhibit collaboration between designers and AI.

Finally, this study did not address the ethical concerns associated with the integration of Gen AI into the design process, particularly issues related to accountability, ownership of co-created content (Rezwana and Maher, 2023), and copyright protection (Härkönen, 2020). Nor did it examine in depth the broader implications of the widespread use of Gen AI in creative content generation, such as the reinforcement of biases, the repetition of ideas and styles (Herm et al., 2022), or the alignment of Gen AI tools with emerging regulatory frameworks. These issues, while highly relevant, fall outside the scope of the present investigation and are proposed as directions for future research. Furthermore, it is worth noting that the reflections presented in this study are strictly related to the described didactic context and, therefore, should be understood as exploratory insights that emerge from an educational experience rather than as generalisable conclusions.

Statements

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding authors.

Ethics statement

The study was conducted in accordance with the internal legal and ethical guidelines provided by Politecnico di Milano for research involving the results of didactic activities. In line with these guidelines, the study falls within the framework of secondary research, as it involved the analysis of existing data generated during a regular curricular activity formally included in the MSc programme at Politecnico di Milano. The course was delivered according to a syllabus approved by the Programme Committee, following standard teaching methodologies and modes of interaction. No additional activities related to the research goals, such as direct interviews, participant observations or targeted data collection, were conducted with students during the course. Regarding the generative AI tools employed in the laboratory, only commercially available solutions were used, none of them in beta testing and all previously validated in both academic and professional fashion contexts. The analysis of the participants’ outcomes began only after the course had concluded and was based on outputs officially submitted by students for assessment purposes. All data were fully anonymised and analysed in aggregated form, thus avoiding the use of personal, biometric, or sensitive information. Furthermore, no individual student content, including creative or design outputs, was published or included in the article; such materials were used exclusively to inform broader interpretative models. According to institutional data protection policies and second-level privacy information issued by Politecnico di Milano, students are informed, via enrolment procedures and institutional platforms, that data generated during teaching activities may be used in anonymised and/or aggregated form for purposes such as educational quality monitoring, internal evaluation, and scientific research, in line with Articles 5(1)(b) and 89(1) of Regulation (EU) 2016/679 (GDPR). 
By enrolling, students accept these institutional data practices, which include appropriate safeguards to protect individual rights and data confidentiality and to ensure responsible and transparent data use across teaching and research contexts.

Author contributions

Both authors jointly contributed to the development of the methodology, the structure of the article, and the revision of the submitted version. GR was responsible for the subsection “Assessing Generative AI Tools for Fashion Design” within the “Introduction,” and for drafting the sections “Designer–AI Collaboration Experiment: The Artificial A(i)rchive Laboratory” (including “Methodological overview,” “Materials and process overview,” “Mapping the application of generative AI tools,” and “Outcomes overview of the fashion design students’ collaboration with AI”), “Discussion and Results” (including “Creation of the design process analysis matrices,” “Analysis of results and identification of designer–AI interaction dynamics,” and “Definition of designer-AI collaboration archetypes: exploration of potential and critical issues”), and “Conclusions” (including “Reflections on the artificial A(i)rchive experience” and “Limitations and Future Research”). PB was responsible for the “Introduction,” including the subsections “The Rise of Generative AI and Its Application in Cultural and Creative Industries,” “Can AI Be Creative? Drawing on the Current Debate,” and “Generative AI Tools in Design Processes: Designer–AI Collaboration.” All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Acknowledgments